Compare commits

214 Commits

| SHA1 |
|---|
| daebe83e32 |
| 7bafcdb3da |
| 2deb8e5b9d |
| d7f2d9f58b |
| 42b7a51080 |
| c4bba43667 |
| 3f176cda8d |
| e00b4a503e |
| 30bdd6792c |
| 9c6e66f315 |
| 9010d16319 |
| 3ef7b10f5b |
| 3294463599 |
| fc2d32e72d |
| 62ad8433bf |
| 66ec0a2d8d |
| e767886565 |
| 00c3342b4d |
| 0e86e37235 |
| 1ac544ad78 |
| c22d6dd1cc |
| 4113b1aacc |
| 501d4729d6 |
| 63f0eadb61 |
| c110a71fff |
| 25803e660c |
| 7c6a4ed402 |
| eb39850f63 |
| 409929841d |
| 7aeffacaa5 |
| 883982bf36 |
| e78d979dd3 |
| 613f62e518 |
| b3bf9fe670 |
| 39012d86d7 |
| eafc2919c7 |
| ee69c3aed2 |
| a6b1c271ee |
| cd90ac8e72 |
| ade18bc11f |
| 4935936afc |
| a59e33d241 |
| d5e77d2c57 |
| 75c5615d10 |
| b494b380db |
| f412573972 |
| ec52ad7636 |
| 3b3ca64296 |
| 61c26d7727 |
| ea93b65ab7 |
| c323068362 |
| 9008815790 |
| 99b52e5f19 |
| f5abbd2e64 |
| f32ece7788 |
| d76b2ba8d2 |
| 93138c91e2 |
| f0e7614443 |
| d3911faebc |
| 584215b186 |
| 99c073d6da |
| 44304229cb |
| 381fcd710e |
| c8b92ce4d5 |
| d5cb191299 |
| 3f901c8692 |
| e24577b663 |
| e9d403493f |
| fa7e3f3d95 |
| 05f40224b9 |
| 55e86ead02 |
| 515766452d |
| dbe3488ad1 |
| 88e488bdc7 |
| 001f7414db |
| 1d65ed38f9 |
| e1ea39e287 |
| 079d742ea0 |
| 733b137b2a |
| 1dad2bb791 |
| 840246bd77 |
| 4403a5c334 |
| 3d7b79fecd |
| ba95b36e09 |
| 15471090d5 |
| 881feef4e9 |
| c45f09be08 |
| 35648ba195 |
| eaa50f83bc |
| c4d3f7fd2a |
| f0497bbbd4 |
| 47825ef2ad |
| f3ce9e92f4 |
| 4e9e4f25cc |
| d820d6462a |
| 87171cc880 |
| 9d53fc6055 |
| 8419d113f4 |
| 2173ab6775 |
| bb67ac9b55 |
| 6d78104d03 |
| 812752972f |
| 5d53065519 |
| 0fd39e17b7 |
| 2b55458e30 |
| d414eaec72 |
| 6334650d22 |
| ccf57d9c5c |
| a23520782c |
| 86698a50bb |
| 00c744c004 |
| 67dda78cbe |
| 62e0ec7ea4 |
| b43d2a7567 |
| b6c718a536 |
| 96d012a34b |
| bd8d50bab9 |
| d9055b5030 |
| 52b7d38df8 |
| 4d431f0476 |
| caeeec803e |
| 2c4dc07d2d |
| 2234d31974 |
| 917e397c97 |
| aa320386a2 |
| 2169f5498c |
| dd5357b975 |
| cb59805ff0 |
| c708b29657 |
| 2d238ff6a2 |
| 7b778d6951 |
| e4dbb323a5 |
| f478a6e894 |
| 88a756fe50 |
| ae2dfe59d9 |
| fb1ade15b5 |
| 4ec389c449 |
| cb5713216a |
| 3a79778ce4 |
| 81a1d2bb37 |
| 864ef41fef |
| 999a5094bb |
| a1331536ca |
| 3795aa059e |
| 3c9b024e34 |
| 2ff2b7485e |
| 19dff5fdf2 |
| 7bfc184ee6 |
| 4954c18baa |
| 65a4649696 |
| 03233cf6be |
| 1176f61791 |
| 61f0674a13 |
| 364c68b138 |
| 1de802688c |
| cf58f77400 |
| b96e1b5466 |
| b4073dfaa8 |
| 14ac30a79d |
| d526e15fa8 |
| f7de4fcbd9 |
| 27b6024d2a |
| c145f92125 |
| cde268c40e |
| 78bb92827b |
| 1a0fa0900d |
| 6f9a33d6b4 |
| beb4ba512a |
| 712f825525 |
| bf51c2948b |
| 40bae79a35 |
| bf79d537d7 |
| 53a50efa00 |
| bdd23c504c |
| e98e3be33e |
| 5ed648bf0e |
| b0dd622aa3 |
| 1ebfa0679e |
| a3ea50401d |
| 21c0de6f77 |
| 254e2f1532 |
| 307c26ae50 |
| 4ccbe6fdd6 |
| f8a05e535f |
| 08ba714637 |
| 7f495181a7 |
| 4c629721bd |
| 7e9fe17e76 |
| 56dc0824c8 |
| cd16bf43bd |
| 23a0059e41 |
| 546af6e46b |
| a6811aca6b |
| d9b0c1da1c |
| 8930ff1c88 |
| 6cf2fb5490 |
| 14a585641c |
| 2a039150a8 |
| b7dea68ff5 |
| 5605a6210f |
| 61a8a9c17b |
| db73cd1cdd |
| 38335cbd4c |
| 6d50599ba0 |
| cc6f755f07 |
| 4bd1790a52 |
| 2505e01fce |
| 5ff2d9e9e7 |
| ff47f0978f |
| 67125542a9 |
| 6bd8bf7d9f |
| c7399bc8e7 |
| 5f796a982b |
| 416d943d14 |

1 · .gitattributes · vendored · Normal file

@@ -0,0 +1 @@

```
*.css linguist-detectable=false
```

9 · .gitignore · vendored

```
@@ -1,3 +1,4 @@
deploy/docker/environment_tiny/common_test
frontend/node_modules
frontend/.pnp
*.pnp.js
@@ -19,7 +20,13 @@ frontend/.yarnclean
frontend/npm-debug.log*
frontend/yarn-debug.log*
frontend/yarn-error.log*
frontend/src/constants/env.ts

.idea

**/.vscode
*.tgz
**/build
**/build
**/storage
**/locust-scripts/__pycache__/
```

76 · CODE_OF_CONDUCT.md · Normal file

@@ -0,0 +1,76 @@

```markdown
# Contributor Covenant Code of Conduct

## Our Pledge

In the interest of fostering an open and welcoming environment, we as
contributors and maintainers pledge to making participation in our project and
our community a harassment-free experience for everyone, regardless of age, body
size, disability, ethnicity, sex characteristics, gender identity and expression,
level of experience, education, socio-economic status, nationality, personal
appearance, race, religion, or sexual identity and orientation.

## Our Standards

Examples of behavior that contributes to creating a positive environment
include:

* Using welcoming and inclusive language
* Being respectful of differing viewpoints and experiences
* Gracefully accepting constructive criticism
* Focusing on what is best for the community
* Showing empathy towards other community members

Examples of unacceptable behavior by participants include:

* The use of sexualized language or imagery and unwelcome sexual attention or
  advances
* Trolling, insulting/derogatory comments, and personal or political attacks
* Public or private harassment
* Publishing others' private information, such as a physical or electronic
  address, without explicit permission
* Other conduct which could reasonably be considered inappropriate in a
  professional setting

## Our Responsibilities

Project maintainers are responsible for clarifying the standards of acceptable
behavior and are expected to take appropriate and fair corrective action in
response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or
reject comments, commits, code, wiki edits, issues, and other contributions
that are not aligned to this Code of Conduct, or to ban temporarily or
permanently any contributor for other behaviors that they deem inappropriate,
threatening, offensive, or harmful.

## Scope

This Code of Conduct applies both within project spaces and in public spaces
when an individual is representing the project or its community. Examples of
representing a project or community include using an official project e-mail
address, posting via an official social media account, or acting as an appointed
representative at an online or offline event. Representation of a project may be
further defined and clarified by project maintainers.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be
reported by contacting the project team at dev@signoz.io. All
complaints will be reviewed and investigated and will result in a response that
is deemed necessary and appropriate to the circumstances. The project team is
obligated to maintain confidentiality with regard to the reporter of an incident.
Further details of specific enforcement policies may be posted separately.

Project maintainers who do not follow or enforce the Code of Conduct in good
faith may face temporary or permanent repercussions as determined by other
members of the project's leadership.

## Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,
available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

[homepage]: https://www.contributor-covenant.org

For answers to common questions about this code of conduct, see
https://www.contributor-covenant.org/faq
```

53

README.md

53

README.md

@@ -1,34 +1,49 @@

|

||||

<p align="center"><img src="https://signoz.io/img/SigNozLogo-200x200.svg" alt="SigNoz Logo" width="100"></p>

|

||||

<p align="center">

|

||||

<img src="https://res.cloudinary.com/dcv3epinx/image/upload/v1618904450/signoz-images/LogoGithub_sigfbu.svg" alt="SigNoz-logo" width="240" />

|

||||

|

||||

# SigNoz

|

||||

SigNoz is an opensource observability platform. SigNoz uses distributed tracing to gain visibility into your systems and powers data using [Kafka](https://kafka.apache.org/) (to handle high ingestion rate and backpressure) and [Apache Druid](https://druid.apache.org/) (Apache Druid is a high performance real-time analytics database), both proven in industry to handle scale.

|

||||

<p align="center">Monitor your applications and troubleshoot problems in your deployed applications, an open-source alternative to DataDog, New Relic, etc.</p>

|

||||

</p>

|

||||

|

||||

[](LICENSE)

|

||||

|

||||

|

||||

##

|

||||

|

||||

SigNoz is an opensource observability platform. SigNoz uses distributed tracing to gain visibility into your systems and powers data using [Kafka](https://kafka.apache.org/) (to handle high ingestion rate and backpressure) and [Apache Druid](https://druid.apache.org/) (Apache Druid is a high performance real-time analytics database), both proven in the industry to handle scale.

|

||||

|

||||

<!--  -->

|

||||

|

||||

|

||||

|

||||

### Features:

|

||||

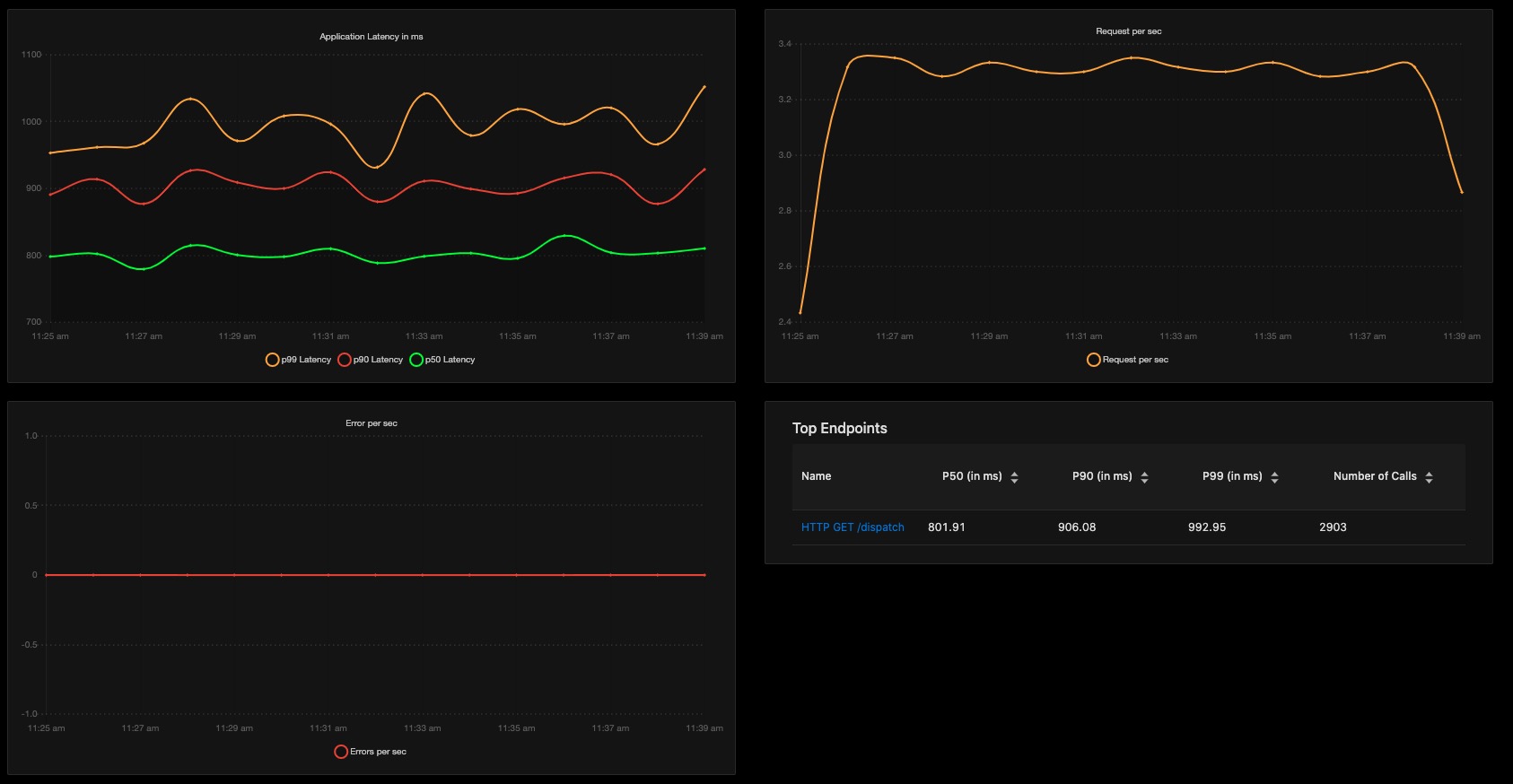

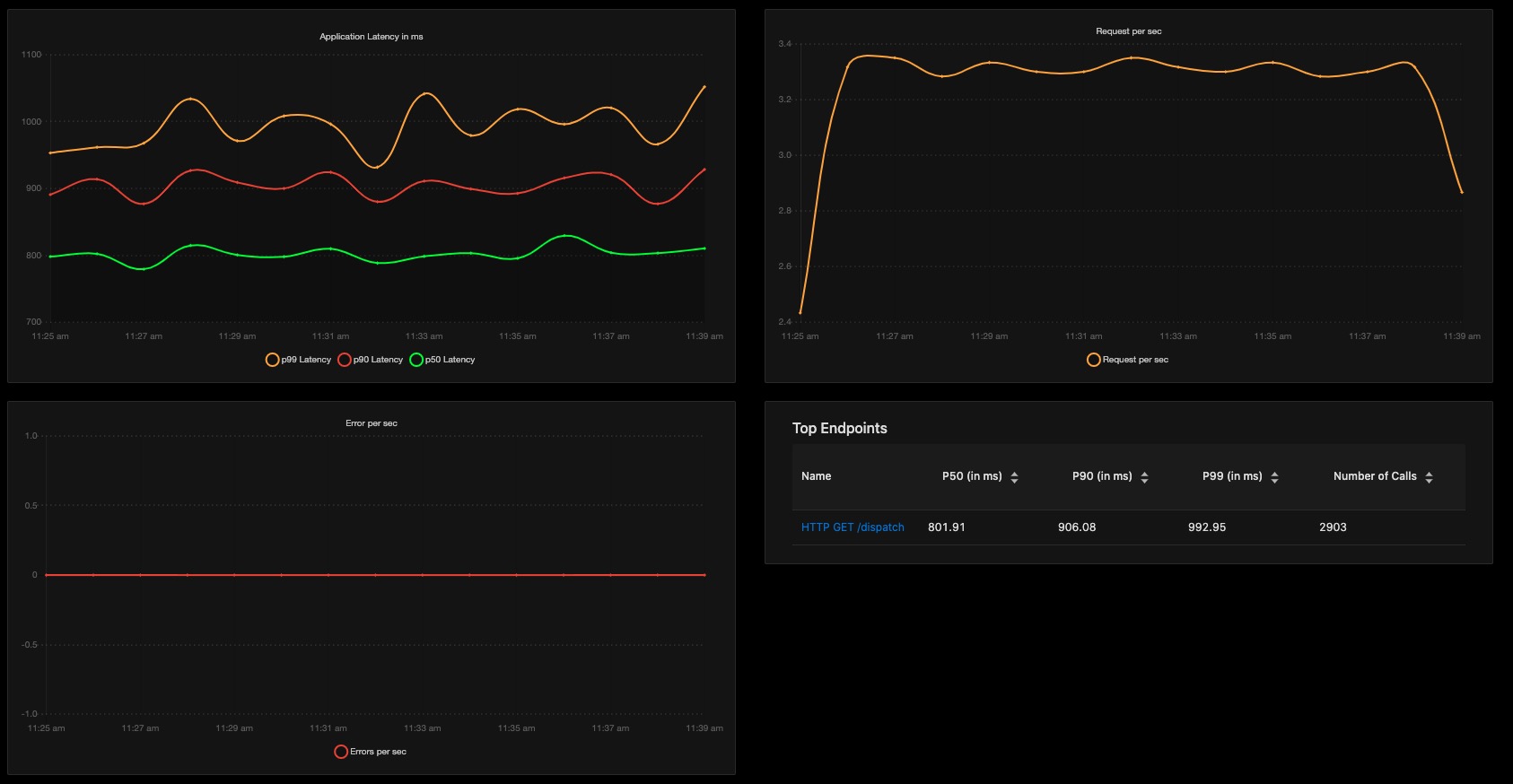

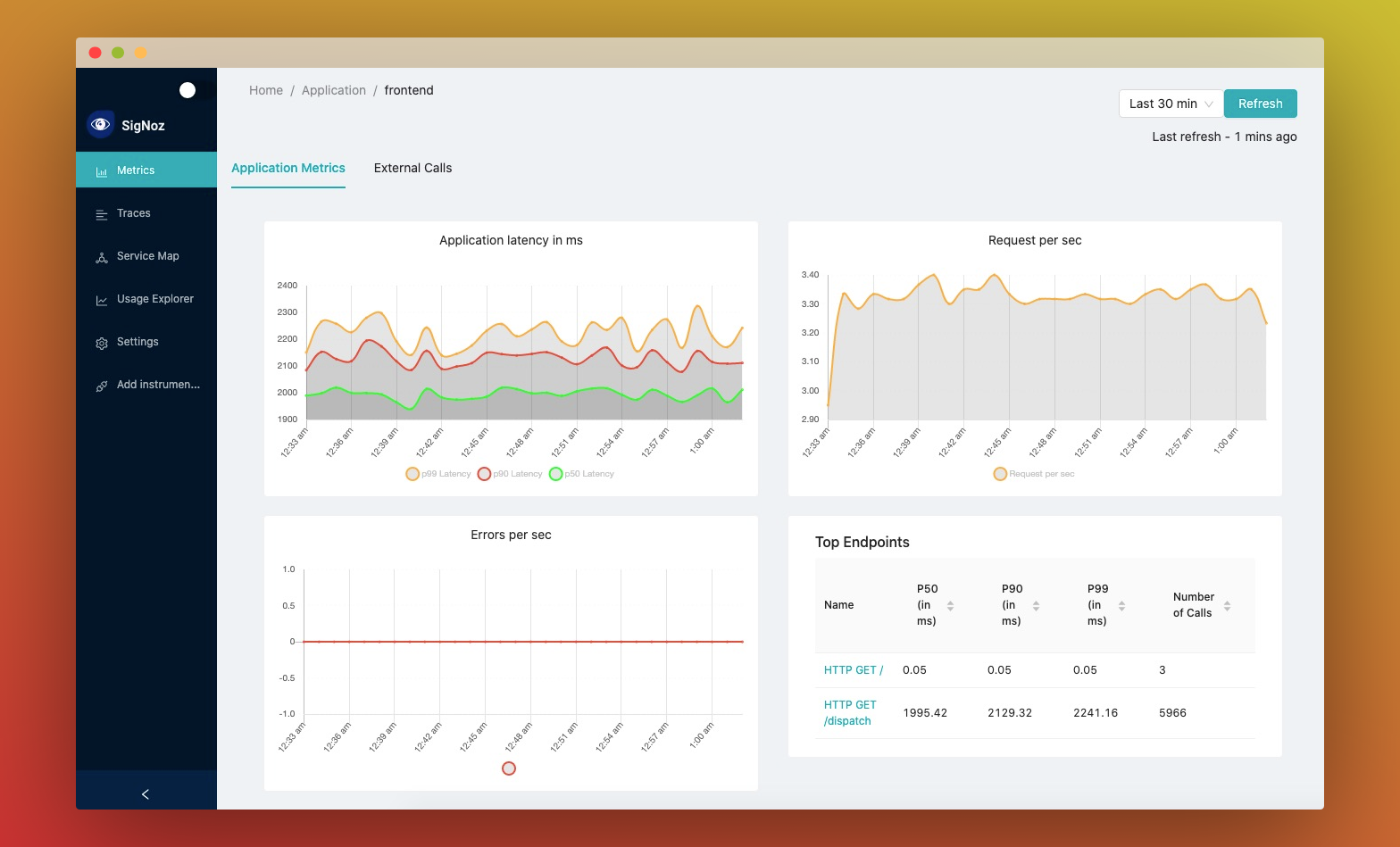

- Application overview metrics like RPS, 50th/90th/99th Percentile latencies and Error Rate

|

||||

|

||||

- Application overview metrics like RPS, 50th/90th/99th Percentile latencies, and Error Rate

|

||||

- Slowest endpoints in your application

|

||||

- See exact request trace to figure out issues in downstream services, slow DB queries, call to 3rd party services like payment gateways, etc

|

||||

- Filter traces by service name, operation, latency, error, tags/annotations.

|

||||

- Filter traces by service name, operation, latency, error, tags/annotations.

|

||||

- Aggregate metrics on filtered traces. Eg, you can get error rate and 99th percentile latency of `customer_type: gold` or `deployment_version: v2` or `external_call: paypal`

|

||||

- Unified UI for metrics and traces. No need to switch from Prometheus to Jaeger to debug issues.

|

||||

- In-built workflows to reduce your efforts in detecting common issues like new deployment failures, 3rd party slow APIs, etc (Coming Soon)

|

||||

- Anomaly Detection Framework (Coming Soon)

|

||||

|

||||

|

||||

### Motivation:

|

||||

- SaaS vendors charge insane amount to provide Application Monitoring. They often surprise you by huge month end bills without any tranparency of data sent to them.

|

||||

|

||||

- SaaS vendors charge an insane amount to provide Application Monitoring. They often surprise you with huge month end bills without any transparency of data sent to them.

|

||||

- Data privacy and compliance demands data to not leave the network boundary

|

||||

- No more magic happening in agents installed in your infra. You take control of sampling, uptime, configuration. Also, you can build modules over SigNoz to extend business specific capabilities.

|

||||

|

||||

|

||||

- Highly scalable architecture

|

||||

- No more magic happening in agents installed in your infra. You take control of sampling, uptime, configuration.

|

||||

- Build modules over SigNoz to extend business specific capabilities

|

||||

|

||||

# Getting Started

|

||||

|

||||

Deploy in Kubernetes using Helm. Below steps will install the SigNoz in platform namespace inside you k8s cluster.

|

||||

## Deploy using docker-compose

|

||||

|

||||

We have a tiny-cluster setup and a standard setup to deploy using docker-compose.

|

||||

Follow the steps listed at https://signoz.io/docs/deployment/docker/.

|

||||

The troubleshooting instructions at https://signoz.io/docs/deployment/docker/#troubleshooting may be helpful

|

||||

|

||||

## Deploy in Kubernetes using Helm.

|

||||

|

||||

Below steps will install the SigNoz in platform namespace inside your k8s cluster.

|

||||

|

||||

```console

|

||||

git clone https://github.com/SigNoz/signoz.git && cd signoz

|

||||

@@ -38,8 +53,8 @@ helm -n platform install signoz deploy/kubernetes/platform

|

||||

kubectl -n platform apply -Rf deploy/kubernetes/jobs

|

||||

kubectl -n platform apply -f deploy/kubernetes/otel-collector

|

||||

```

|

||||

|

||||

**You can choose a different namespace too. In that case, you need to point your applications to correct address to send traces. In our sample application just change the `JAEGER_ENDPOINT` environment variable in `sample-apps/hotrod/deployment.yaml`*

|

||||

|

||||

\*_You can choose a different namespace too. In that case, you need to point your applications to correct address to send traces. In our sample application just change the `JAEGER_ENDPOINT` environment variable in `sample-apps/hotrod/deployment.yaml`_

|

||||

|

||||

### Test HotROD application with SigNoz

|

||||

|

||||

@@ -53,17 +68,19 @@ kubectl -n sample-application apply -Rf sample-apps/hotrod/

|

||||

`kubectl -n sample-application run strzal --image=djbingham/curl --restart='OnFailure' -i --tty --rm --command -- curl -X POST -F 'locust_count=6' -F 'hatch_rate=2' http://locust-master:8089/swarm`

|

||||

|

||||

### See UI

|

||||

|

||||

`kubectl -n platform port-forward svc/signoz-frontend 3000:3000`

|

||||

|

||||

### How to stop load

|

||||

|

||||

`kubectl -n sample-application run strzal --image=djbingham/curl --restart='OnFailure' -i --tty --rm --command -- curl http://locust-master:8089/stop`

|

||||

|

||||

|

||||

# Documentation

|

||||

You can find docs at https://signoz.io/docs/installation. If you need any clarification or find something missing, feel free to raise a github issue with label `documentation` or reach out to us at community slack channel.

|

||||

|

||||

You can find docs at https://signoz.io/docs/deployment/docker. If you need any clarification or find something missing, feel free to raise a GitHub issue with the label `documentation` or reach out to us at the community slack channel.

|

||||

|

||||

# Community

|

||||

Join the [slack community](https://app.slack.com/client/T01HWUTP0LT#/) to know more about distributed tracing, observability or SigNoz and to connect with other users and contributors.

|

||||

|

||||

If you have any ideas, questions or any feedback, please share on our [Github Discussions](https://github.com/SigNoz/signoz/discussions)

|

||||

Join the [slack community](https://app.slack.com/client/T01HWUTP0LT#/) to know more about distributed tracing, observability, or SigNoz and to connect with other users and contributors.

|

||||

|

||||

If you have any ideas, questions, or any feedback, please share on our [Github Discussions](https://github.com/SigNoz/signoz/discussions)

|

||||

|

||||
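One of the README feature bullets describes aggregating metrics over filtered traces, e.g. the error rate and 99th percentile latency of spans tagged `customer_type: gold`. The Python sketch below shows that computation on an in-memory list of spans. The `Span` record, its fields (`duration_ms`, `error`, `tags`), and the nearest-rank percentile definition are assumptions made for illustration only; they are not SigNoz's data model or query code (in the compose files on this page, the query-service reads from the `flattened_spans` Druid datasource).

```python
import math
from dataclasses import dataclass, field


@dataclass
class Span:
    # Hypothetical minimal span record, used only for this illustration.
    duration_ms: float
    error: bool
    tags: dict = field(default_factory=dict)


def percentile(values, p):
    """Nearest-rank percentile of a non-empty list, with p in (0, 100]."""
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def aggregate(spans, tag_key, tag_value):
    """Count, error rate and p99 latency over spans whose tag `tag_key` equals `tag_value`."""
    selected = [s for s in spans if s.tags.get(tag_key) == tag_value]
    if not selected:
        return None  # nothing matched the filter
    return {
        "count": len(selected),
        "error_rate": sum(s.error for s in selected) / len(selected),
        "p99_ms": percentile([s.duration_ms for s in selected], 99),
    }


if __name__ == "__main__":
    spans = [Span(float(ms), error=(ms % 10 == 0), tags={"customer_type": "gold"}) for ms in range(1, 101)]
    print(aggregate(spans, "customer_type", "gold"))
```

Filtering first and aggregating second keeps the two concerns separate, which is the same shape as "filter traces by tag, then compute metrics on the result".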

264 · deploy/docker/docker-compose-tiny.yaml · Normal file

@@ -0,0 +1,264 @@

```yaml
version: "2.4"

volumes:
  metadata_data: {}
  middle_var: {}
  historical_var: {}
  broker_var: {}
  coordinator_var: {}
  router_var: {}

# If able to connect to kafka but not able to write to topic otlp_spans look into below link
# https://github.com/wurstmeister/kafka-docker/issues/409#issuecomment-428346707

services:

  zookeeper:
    image: bitnami/zookeeper:3.6.2-debian-10-r100
    ports:
      - "2181:2181"
    environment:
      - ALLOW_ANONYMOUS_LOGIN=yes


  kafka:
    # image: wurstmeister/kafka
    image: bitnami/kafka:2.7.0-debian-10-r1
    ports:
      - "9092:9092"
    hostname: kafka
    environment:
      KAFKA_ADVERTISED_HOST_NAME: kafka
      KAFKA_ADVERTISED_PORT: 9092
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      ALLOW_PLAINTEXT_LISTENER: 'yes'
      KAFKA_CFG_AUTO_CREATE_TOPICS_ENABLE: 'true'
      KAFKA_TOPICS: 'otlp_spans:1:1,flattened_spans:1:1'

    healthcheck:
      # test: ["CMD", "kafka-topics.sh", "--create", "--topic", "otlp_spans", "--zookeeper", "zookeeper:2181"]
      test: ["CMD", "kafka-topics.sh", "--list", "--zookeeper", "zookeeper:2181"]
      interval: 30s
      timeout: 10s
      retries: 10
    depends_on:
      - zookeeper

  postgres:
    container_name: postgres
    image: postgres:latest
    volumes:
      - metadata_data:/var/lib/postgresql/data
    environment:
      - POSTGRES_PASSWORD=FoolishPassword
      - POSTGRES_USER=druid
      - POSTGRES_DB=druid

  coordinator:
    image: apache/druid:0.20.0
    container_name: coordinator
    volumes:
      - ./storage:/opt/data
      - coordinator_var:/opt/druid/var
    depends_on:
      - zookeeper
      - postgres
    ports:
      - "8081:8081"
    command:
      - coordinator
    env_file:
      - environment_tiny/coordinator
      - environment_tiny/common

  broker:
    image: apache/druid:0.20.0
    container_name: broker
    volumes:
      - broker_var:/opt/druid/var
    depends_on:
      - zookeeper
      - postgres
      - coordinator
    ports:
      - "8082:8082"
    command:
      - broker
    env_file:
      - environment_tiny/broker
      - environment_tiny/common

  historical:
    image: apache/druid:0.20.0
    container_name: historical
    volumes:
      - ./storage:/opt/data
      - historical_var:/opt/druid/var
    depends_on:
      - zookeeper
      - postgres
      - coordinator
    ports:
      - "8083:8083"
    command:
      - historical
    env_file:
      - environment_tiny/historical
      - environment_tiny/common

  middlemanager:
    image: apache/druid:0.20.0
    container_name: middlemanager
    volumes:
      - ./storage:/opt/data
      - middle_var:/opt/druid/var
    depends_on:
      - zookeeper
      - postgres
      - coordinator
    ports:
      - "8091:8091"
    command:
      - middleManager
    env_file:
      - environment_tiny/middlemanager
      - environment_tiny/common

  router:
    image: apache/druid:0.20.0
    container_name: router
    volumes:
      - router_var:/opt/druid/var
    depends_on:
      - zookeeper
      - postgres
      - coordinator
    ports:
      - "8888:8888"
    command:
      - router
    env_file:
      - environment_tiny/router
      - environment_tiny/common

  flatten-processor:
    image: signoz/flattener-processor:0.2.0
    container_name: flattener-processor

    depends_on:
      - kafka
      - otel-collector
    ports:
      - "8000:8000"

    environment:
      - KAFKA_BROKER=kafka:9092
      - KAFKA_INPUT_TOPIC=otlp_spans
      - KAFKA_OUTPUT_TOPIC=flattened_spans


  query-service:
    image: signoz.docker.scarf.sh/signoz/query-service:0.2.0
    container_name: query-service

    depends_on:
      - router
    ports:
      - "8080:8080"

    environment:
      - DruidClientUrl=http://router:8888
      - DruidDatasource=flattened_spans
      - POSTHOG_API_KEY=H-htDCae7CR3RV57gUzmol6IAKtm5IMCvbcm_fwnL-w


  frontend:
    image: signoz/frontend:0.2.1
    container_name: frontend

    depends_on:
      - query-service
    links:
      - "query-service"
    ports:
      - "3000:3000"
    volumes:
      - ./nginx-config.conf:/etc/nginx/conf.d/default.conf

  create-supervisor:
    image: theithollow/hollowapp-blog:curl
    container_name: create-supervisor
    command:
      - /bin/sh
      - -c
      - "curl -X POST -H 'Content-Type: application/json' -d @/app/supervisor-spec.json http://router:8888/druid/indexer/v1/supervisor"

    depends_on:
      - router
    restart: on-failure:6

    volumes:
      - ./druid-jobs/supervisor-spec.json:/app/supervisor-spec.json


  set-retention:
    image: theithollow/hollowapp-blog:curl
    container_name: set-retention
    command:
      - /bin/sh
      - -c
      - "curl -X POST -H 'Content-Type: application/json' -d @/app/retention-spec.json http://router:8888/druid/coordinator/v1/rules/flattened_spans"

    depends_on:
      - router
    restart: on-failure:6
    volumes:
      - ./druid-jobs/retention-spec.json:/app/retention-spec.json

  otel-collector:
    image: otel/opentelemetry-collector:0.18.0
    command: ["--config=/etc/otel-collector-config.yaml", "--mem-ballast-size-mib=683"]
    volumes:
      - ./otel-collector-config.yaml:/etc/otel-collector-config.yaml
    ports:
      - "1777:1777" # pprof extension
      - "8887:8888" # Prometheus metrics exposed by the agent
      - "14268:14268" # Jaeger receiver
      - "55678" # OpenCensus receiver
      - "55680:55680" # OTLP HTTP/2.0 legacy port
      - "55681:55681" # OTLP HTTP/1.0 receiver
      - "4317:4317" # OTLP GRPC receiver
      - "55679:55679" # zpages extension
      - "13133" # health_check
    depends_on:
      kafka:
        condition: service_healthy


  hotrod:
    image: jaegertracing/example-hotrod:latest
    container_name: hotrod
    ports:
      - "9000:8080"
    command: ["all"]
    environment:
      - JAEGER_ENDPOINT=http://otel-collector:14268/api/traces


  load-hotrod:
    image: "grubykarol/locust:1.2.3-python3.9-alpine3.12"
    container_name: load-hotrod
    hostname: load-hotrod
    ports:
      - "8089:8089"
    environment:
      ATTACKED_HOST: http://hotrod:8080
      LOCUST_MODE: standalone
      NO_PROXY: standalone
      TASK_DELAY_FROM: 5
      TASK_DELAY_TO: 30
      QUIET_MODE: "${QUIET_MODE:-false}"
      LOCUST_OPTS: "--headless -u 10 -r 1"
    volumes:
      - ./locust-scripts:/locust
```
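In the tiny compose file, `depends_on` controls the order in which containers are started; only `otel-collector` additionally waits for `kafka` to pass its healthcheck (`condition: service_healthy`). To make the resulting start order concrete, the Python sketch below (assuming Python 3.9+ for the standard-library `graphlib` module) copies the `depends_on` lists into a dict and computes one valid topological order. It illustrates the ordering constraint only; Compose itself may start independent services in a different order or in parallel.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# depends_on lists transcribed from docker-compose-tiny.yaml; [] means no dependencies.
DEPENDS_ON = {
    "zookeeper": [],
    "kafka": ["zookeeper"],
    "postgres": [],
    "coordinator": ["zookeeper", "postgres"],
    "broker": ["zookeeper", "postgres", "coordinator"],
    "historical": ["zookeeper", "postgres", "coordinator"],
    "middlemanager": ["zookeeper", "postgres", "coordinator"],
    "router": ["zookeeper", "postgres", "coordinator"],
    "otel-collector": ["kafka"],  # waits for kafka's healthcheck (condition: service_healthy)
    "flatten-processor": ["kafka", "otel-collector"],
    "query-service": ["router"],
    "frontend": ["query-service"],
    "create-supervisor": ["router"],
    "set-retention": ["router"],
    "hotrod": [],
    "load-hotrod": [],
}


def start_order(graph):
    """Return one start order in which every service follows all of its dependencies.

    TopologicalSorter expects {node: predecessors}, which is exactly the shape of
    depends_on; it raises graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(start_order(DEPENDS_ON))
```

Any order this returns puts `zookeeper` before `kafka`, `kafka` before `otel-collector`, and `router` before the two one-shot `curl` jobs, which is why those jobs still need `restart: on-failure` to cover the time the router takes to become ready after its container starts.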

259

deploy/docker/docker-compose.yaml

Normal file

259

deploy/docker/docker-compose.yaml

Normal file

@@ -0,0 +1,259 @@

|

||||

version: "2.4"

|

||||

|

||||

volumes:

|

||||

metadata_data: {}

|

||||

middle_var: {}

|

||||

historical_var: {}

|

||||

broker_var: {}

|

||||

coordinator_var: {}

|

||||

router_var: {}

|

||||

|

||||

# If able to connect to kafka but not able to write to topic otlp_spans look into below link

|

||||

# https://github.com/wurstmeister/kafka-docker/issues/409#issuecomment-428346707

|

||||

|

||||

services:

|

||||

|

||||

zookeeper:

|

||||

image: bitnami/zookeeper:3.6.2-debian-10-r100

|

||||

ports:

|

||||

- "2181:2181"

|

||||

environment:

|

||||

- ALLOW_ANONYMOUS_LOGIN=yes

|

||||

|

||||

|

||||

kafka:

|

||||

# image: wurstmeister/kafka

|

||||

image: bitnami/kafka:2.7.0-debian-10-r1

|

||||

ports:

|

||||

- "9092:9092"

|

||||

hostname: kafka

|

||||

environment:

|

||||

KAFKA_ADVERTISED_HOST_NAME: kafka

|

||||

KAFKA_ADVERTISED_PORT: 9092

|

||||

KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181

|

||||

ALLOW_PLAINTEXT_LISTENER: 'yes'

|

||||

KAFKA_CFG_AUTO_CREATE_TOPICS_ENABLE: 'true'

|

||||

KAFKA_TOPICS: 'otlp_spans:1:1,flattened_spans:1:1'

|

||||

|

||||

healthcheck:

|

||||

# test: ["CMD", "kafka-topics.sh", "--create", "--topic", "otlp_spans", "--zookeeper", "zookeeper:2181"]

|

||||

test: ["CMD", "kafka-topics.sh", "--list", "--zookeeper", "zookeeper:2181"]

|

||||

interval: 30s

|

||||

timeout: 10s

|

||||

retries: 10

|

||||

depends_on:

|

||||

- zookeeper

|

||||

|

||||

postgres:

|

||||

container_name: postgres

|

||||

image: postgres:latest

|

||||

volumes:

|

||||

- metadata_data:/var/lib/postgresql/data

|

||||

environment:

|

||||

- POSTGRES_PASSWORD=FoolishPassword

|

||||

- POSTGRES_USER=druid

|

||||

- POSTGRES_DB=druid

|

||||

|

||||

coordinator:

|

||||

image: apache/druid:0.20.0

|

||||

container_name: coordinator

|

||||

volumes:

|

||||

- ./storage:/opt/druid/deepStorage

|

||||

- coordinator_var:/opt/druid/data

|

||||

depends_on:

|

||||

- zookeeper

|

||||

- postgres

|

||||

ports:

|

||||

- "8081:8081"

|

||||

command:

|

||||

- coordinator

|

||||

env_file:

|

||||

- environment_small/coordinator

|

||||

|

||||

broker:

|

||||

image: apache/druid:0.20.0

|

||||

container_name: broker

|

||||

volumes:

|

||||

- broker_var:/opt/druid/data

|

||||

depends_on:

|

||||

- zookeeper

|

||||

- postgres

|

||||

- coordinator

|

||||

ports:

|

||||

- "8082:8082"

|

||||

command:

|

||||

- broker

|

||||

env_file:

|

||||

- environment_small/broker

|

||||

|

||||

historical:

|

||||

image: apache/druid:0.20.0

|

||||

container_name: historical

|

||||

volumes:

|

||||

- ./storage:/opt/druid/deepStorage

|

||||

- historical_var:/opt/druid/data

|

||||

depends_on:

|

||||

- zookeeper

|

||||

- postgres

|

||||

- coordinator

|

||||

ports:

|

||||

- "8083:8083"

|

||||

command:

|

||||

- historical

|

||||

env_file:

|

||||

- environment_small/historical

|

||||

|

||||

middlemanager:

|

||||

image: apache/druid:0.20.0

|

||||

container_name: middlemanager

|

||||

volumes:

|

||||

- ./storage:/opt/druid/deepStorage

|

||||

- middle_var:/opt/druid/data

|

||||

depends_on:

|

||||

- zookeeper

|

||||

- postgres

|

||||

- coordinator

|

||||

ports:

|

||||

- "8091:8091"

|

||||

command:

|

||||

- middleManager

|

||||

env_file:

|

||||

- environment_small/middlemanager

|

||||

|

||||

router:

|

||||

image: apache/druid:0.20.0

|

||||

container_name: router

|

||||

volumes:

|

||||

- router_var:/opt/druid/data

|

||||

depends_on:

|

||||

- zookeeper

|

||||

- postgres

|

||||

- coordinator

|

||||

ports:

|

||||

- "8888:8888"

|

||||

command:

|

||||

- router

|

||||

env_file:

|

||||

- environment_small/router

|

||||

|

||||

flatten-processor:

|

||||

image: signoz/flattener-processor:0.2.0

|

||||

container_name: flattener-processor

|

||||

|

||||

depends_on:

|

||||

- kafka

|

||||

- otel-collector

|

||||

ports:

|

||||

- "8000:8000"

|

||||

|

||||

environment:

|

||||

- KAFKA_BROKER=kafka:9092

|

||||

- KAFKA_INPUT_TOPIC=otlp_spans

|

||||

- KAFKA_OUTPUT_TOPIC=flattened_spans

|

||||

|

||||

|

||||

query-service:

|

||||

image: signoz.docker.scarf.sh/signoz/query-service:0.2.0

|

||||

container_name: query-service

|

||||

|

||||

depends_on:

|

||||

- router

|

||||

ports:

|

||||

- "8080:8080"

|

||||

|

||||

environment:

|

||||

- DruidClientUrl=http://router:8888

|

||||

- DruidDatasource=flattened_spans

|

||||

- POSTHOG_API_KEY=H-htDCae7CR3RV57gUzmol6IAKtm5IMCvbcm_fwnL-w

|

||||

|

||||

|

||||

frontend:

|

||||

image: signoz/frontend:0.2.1

|

||||

container_name: frontend

|

||||

|

||||

depends_on:

|

||||

- query-service

|

||||

links:

|

||||

- "query-service"

|

||||

ports:

|

||||

- "3000:3000"

|

||||

volumes:

|

||||

- ./nginx-config.conf:/etc/nginx/conf.d/default.conf

|

||||

|

||||

create-supervisor:

|

||||

image: theithollow/hollowapp-blog:curl

|

||||

container_name: create-supervisor

|

||||

command:

|

||||

- /bin/sh

|

||||

- -c

|

||||

- "curl -X POST -H 'Content-Type: application/json' -d @/app/supervisor-spec.json http://router:8888/druid/indexer/v1/supervisor"

|

||||

|

||||

depends_on:

|

||||

- router

|

||||

restart: on-failure:6

|

||||

|

||||

volumes:

|

||||

- ./druid-jobs/supervisor-spec.json:/app/supervisor-spec.json

|

||||

|

||||

|

||||

set-retention:

|

||||

image: theithollow/hollowapp-blog:curl

|

||||

container_name: set-retention

|

||||

command:

|

||||

- /bin/sh

|

||||

- -c

|

||||

- "curl -X POST -H 'Content-Type: application/json' -d @/app/retention-spec.json http://router:8888/druid/coordinator/v1/rules/flattened_spans"

|

||||

|

||||

depends_on:

|

||||

- router

|

||||

restart: on-failure:6

|

||||

volumes:

|

||||

- ./druid-jobs/retention-spec.json:/app/retention-spec.json

|

||||

|

||||

  otel-collector:
    image: otel/opentelemetry-collector:0.18.0
    command: ["--config=/etc/otel-collector-config.yaml", "--mem-ballast-size-mib=683"]
    volumes:
      - ./otel-collector-config.yaml:/etc/otel-collector-config.yaml
    ports:
      - "1777:1777" # pprof extension
      - "8887:8888" # Prometheus metrics exposed by the agent
      - "14268:14268" # Jaeger receiver
      - "55678" # OpenCensus receiver
      - "55680:55680" # OTLP HTTP/2.0 legacy grpc receiver
      - "55681:55681" # OTLP HTTP/1.0 receiver
      - "4317:4317" # OTLP GRPC receiver
      - "55679:55679" # zpages extension
      - "13133" # health_check
    depends_on:
      kafka:
        condition: service_healthy
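Most `ports` entries for `otel-collector` use the `HOST:CONTAINER` short syntax. Two entries, `"55678"` and `"13133"`, give only a container port. In that form Docker Compose publishes the port on an ephemeral host port chosen by the engine.

The small Python sketch below classifies such entries. `parse_port` is a hypothetical helper. It handles only the plain forms used in this file and rejects IP-prefixed or ranged entries.

```python
from typing import Optional, Tuple


def parse_port(entry: str) -> Tuple[Optional[int], int]:
    """Split a Compose short-syntax port entry into (host_port, container_port).

    host_port is None when only the container port is given; the engine then
    picks a free host port when the container starts.
    """
    spec = entry.split("#", 1)[0].strip().strip('"')  # drop comment and quotes
    parts = spec.split(":")
    if len(parts) == 1:
        return None, int(parts[0])
    if len(parts) == 2:
        return int(parts[0]), int(parts[1])
    raise ValueError(f"unsupported port entry (IP or range?): {entry!r}")


# The otel-collector entries from the compose file above.
OTEL_PORTS = [
    '"1777:1777"', '"8887:8888"', '"14268:14268"', '"55678"',
    '"55680:55680"', '"55681:55681"', '"4317:4317"', '"55679:55679"', '"13133"',
]

if __name__ == "__main__":
    for entry in OTEL_PORTS:
        host, container = parse_port(entry)
        where = "ephemeral host port" if host is None else f"host port {host}"
        print(f"container {container} -> {where}")
```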

  hotrod:
    image: jaegertracing/example-hotrod:latest
    container_name: hotrod
    ports:
      - "9000:8080"
    command: ["all"]
    environment:
      - JAEGER_ENDPOINT=http://otel-collector:14268/api/traces

  load-hotrod:
    image: "grubykarol/locust:1.2.3-python3.9-alpine3.12"
    container_name: load-hotrod
    hostname: load-hotrod
    ports:
      - "8089:8089"
    environment:
      ATTACKED_HOST: http://hotrod:8080
      LOCUST_MODE: standalone
      NO_PROXY: standalone
      TASK_DELAY_FROM: 5
      TASK_DELAY_TO: 30
      QUIET_MODE: "${QUIET_MODE:-false}"
      LOCUST_OPTS: "--headless -u 10 -r 1"
    volumes:
      - ./locust-scripts:/locust

1

deploy/docker/druid-jobs/retention-spec.json

Normal file


@@ -0,0 +1 @@


[{"period":"P3D","includeFuture":true,"tieredReplicants":{"_default_tier":1},"type":"loadByPeriod"},{"type":"dropForever"}]

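The coordinator evaluates retention rules in order and applies the first one that matches a segment. With `includeFuture` set to true, a segment that ends after the start of the last `P3D` window, including future data, is loaded with one replica on `_default_tier`. Everything else falls through to `dropForever`.

The simplified Python sketch below models that decision. It is not Druid's implementation. It assumes `P3D` can be modelled as a 3-day `timedelta` and uses a fixed reference time. `matches` and `decide` are hypothetical helpers.

```python
from datetime import datetime, timedelta, timezone

# The rule list from retention-spec.json, with P3D expressed as a timedelta.
RULES = [
    {"type": "loadByPeriod", "period": timedelta(days=3), "includeFuture": True,
     "tieredReplicants": {"_default_tier": 1}},
    {"type": "dropForever"},
]


def matches(rule: dict, start: datetime, end: datetime, now: datetime) -> bool:
    """Return True if `rule` applies to the segment interval [start, end)."""
    if rule["type"] == "dropForever":
        return True
    if rule["type"] == "loadByPeriod":
        window_start = now - rule["period"]
        if rule.get("includeFuture", True):
            # Anything ending after the window start, future data included.
            return end > window_start
        # Otherwise the segment must overlap [window_start, now).
        return start < now and end > window_start
    raise ValueError(f"rule type not modelled: {rule['type']}")


def decide(start: datetime, end: datetime, now: datetime) -> str:
    """Apply the first matching rule, mirroring the ordered evaluation."""
    for rule in RULES:
        if matches(rule, start, end, now):
            return rule["type"]
    return "no rule matched"
```

With daily segments and a reference time of 2021-03-10 12:00 UTC, the segments for March 7 onwards are kept and March 6 is dropped. The March 7 segment survives because it ends after 2021-03-07 12:00.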

69

deploy/docker/druid-jobs/supervisor-spec.json

Normal file


@@ -0,0 +1,69 @@

{
  "type": "kafka",
  "dataSchema": {
    "dataSource": "flattened_spans",
    "parser": {
      "type": "string",
      "parseSpec": {
        "format": "json",
        "timestampSpec": {
          "column": "StartTimeUnixNano",
          "format": "nano"
        },
        "dimensionsSpec": {
          "dimensions": [
            "TraceId",
            "SpanId",
            "ParentSpanId",
            "Name",
            "ServiceName",
            "References",
            "Tags",
            "ExternalHttpMethod",
            "ExternalHttpUrl",
            "Component",
            "DBSystem",
            "DBName",
            "DBOperation",
            "PeerService",
            {
              "type": "string",
              "name": "TagsKeys",
              "multiValueHandling": "ARRAY"
            },
            {
              "type": "string",
              "name": "TagsValues",
              "multiValueHandling": "ARRAY"
            },
            { "name": "DurationNano", "type": "Long" },
            { "name": "Kind", "type": "int" },
            { "name": "StatusCode", "type": "int" }
          ]
        }
      }
    },
    "metricsSpec" : [
      { "type": "quantilesDoublesSketch", "name": "QuantileDuration", "fieldName": "DurationNano" }
    ],
    "granularitySpec": {
      "type": "uniform",
      "segmentGranularity": "DAY",
      "queryGranularity": "NONE",
      "rollup": false
    }
  },
  "tuningConfig": {
    "type": "kafka",
    "reportParseExceptions": true
  },
  "ioConfig": {
    "topic": "flattened_spans",
    "replicas": 1,
    "taskDuration": "PT20M",
    "completionTimeout": "PT30M",
    "consumerProperties": {
      "bootstrap.servers": "kafka:9092"
    }
  }
}
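The supervisor spec reads `StartTimeUnixNano` with `"format": "nano"`, that is, nanoseconds since the Unix epoch. It keeps every row (`"rollup": false`, `"queryGranularity": "NONE"`) and writes one segment per UTC day (`"segmentGranularity": "DAY"`).

The Python sketch below shows how one span timestamp maps to a row time and a daily segment interval. It is for illustration only. It assumes millisecond truncation, since Druid stores timestamps at millisecond precision. `row_time` and `day_segment` are hypothetical helpers.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def row_time(start_time_unix_nano: int) -> datetime:
    """Convert nanoseconds since the epoch to a UTC datetime at millisecond precision."""
    millis = start_time_unix_nano // 1_000_000  # drop sub-millisecond digits
    return EPOCH + timedelta(milliseconds=millis)


def day_segment(ts: datetime) -> tuple:
    """Return the [start, end) interval of the DAY segment that contains ts."""
    start = ts.replace(hour=0, minute=0, second=0, microsecond=0)
    return start, start + timedelta(days=1)
```

For example, `row_time(1_600_000_000_123_456_789)` yields 2020-09-13 12:26:40.123 UTC, and that row falls into the segment for 2020-09-13.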

53

deploy/docker/environment_small/broker

Normal file


@@ -0,0 +1,53 @@

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

# Java tuning
DRUID_XMX=512m
DRUID_XMS=512m
DRUID_MAXNEWSIZE=256m
DRUID_NEWSIZE=256m
DRUID_MAXDIRECTMEMORYSIZE=768m

druid_emitter_logging_logLevel=debug

druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]

druid_zk_service_host=zookeeper

druid_metadata_storage_host=
druid_metadata_storage_type=postgresql
druid_metadata_storage_connector_connectURI=jdbc:postgresql://postgres:5432/druid
druid_metadata_storage_connector_user=druid
druid_metadata_storage_connector_password=FoolishPassword

druid_coordinator_balancer_strategy=cachingCost

druid_indexer_runner_javaOptsArray=["-server", "-Xms512m", "-Xmx512m", "-XX:MaxDirectMemorySize=768m", "-Duser.timezone=UTC", "-Dfile.encoding=UTF-8", "-Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager"]
druid_indexer_fork_property_druid_processing_buffer_sizeBytes=25000000
druid_processing_buffer_sizeBytes=100MiB

druid_storage_type=local
druid_storage_storageDirectory=/opt/druid/deepStorage
druid_indexer_logs_type=file
druid_indexer_logs_directory=/opt/druid/data/indexing-logs

druid_processing_numThreads=1
druid_processing_numMergeBuffers=2

DRUID_LOG4J=<?xml version="1.0" encoding="UTF-8" ?><Configuration status="WARN"><Appenders><Console name="Console" target="SYSTEM_OUT"><PatternLayout pattern="%d{ISO8601} %p [%t] %c - %m%n"/></Console></Appenders><Loggers><Root level="info"><AppenderRef ref="Console"/></Root><Logger name="org.apache.druid.jetty.RequestLog" additivity="false" level="DEBUG"><AppenderRef ref="Console"/></Logger></Loggers></Configuration>
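Druid's tuning guidance sizes direct memory as `druid.processing.buffer.sizeBytes * (druid.processing.numMergeBuffers + druid.processing.numThreads + 1)`. The Druid image maps the `druid_processing_*` variables here to those properties. For this broker the requirement is 100 MiB × (2 + 1 + 1) = 400 MiB, which fits within the 768m `DRUID_MAXDIRECTMEMORYSIZE`.

The Python sketch below parses one of these environment files and applies the check. It is an assumption-laden helper, not a Druid tool. The size parser understands only the suffixes that appear in these files (JVM-style `m`/`g`, `MiB`, and plain byte counts), all as binary units. `parse_size`, `parse_env` and `direct_memory_check` are hypothetical names.

```python
import re

# Multipliers for the size suffixes used in these files. JVM flags such as
# -XX:MaxDirectMemorySize treat k/m/g as powers of 1024; MiB/GiB are binary too.
_UNITS = {
    "": 1,
    "k": 1024, "kib": 1024,
    "m": 1024 ** 2, "mib": 1024 ** 2,
    "g": 1024 ** 3, "gib": 1024 ** 3,
}


def parse_size(text: str) -> int:
    """Parse '768m', '2g', '100MiB' or '25000000' into a number of bytes."""
    match = re.fullmatch(r"\s*(\d+)\s*([A-Za-z]*)\s*", text)
    if not match or match.group(2).lower() not in _UNITS:
        raise ValueError(f"unrecognised size: {text!r}")
    return int(match.group(1)) * _UNITS[match.group(2).lower()]


def parse_env(text: str) -> dict:
    """Read KEY=VALUE lines, skipping blank lines and '#' comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            env[key] = value
    return env


def direct_memory_check(env: dict) -> tuple:
    """Return (ok, needed_bytes, available_bytes) for the processing buffers."""
    buffer_bytes = parse_size(env["druid_processing_buffer_sizeBytes"])
    threads = int(env["druid_processing_numThreads"])
    merge_buffers = int(env["druid_processing_numMergeBuffers"])
    needed = buffer_bytes * (threads + merge_buffers + 1)
    available = parse_size(env["DRUID_MAXDIRECTMEMORYSIZE"])
    return needed <= available, needed, available
```

The same arithmetic applies to the other files. The small historical needs 200 MiB × (2 + 2 + 1) = 1000 MiB against 1280m. The tiny broker needs 50 MiB × 4 = 200 MiB against 400m.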

52

deploy/docker/environment_small/coordinator

Normal file


@@ -0,0 +1,52 @@

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

# Java tuning
DRUID_XMX=64m
DRUID_XMS=64m
DRUID_MAXNEWSIZE=256m
DRUID_NEWSIZE=256m
DRUID_MAXDIRECTMEMORYSIZE=400m

druid_emitter_logging_logLevel=debug

druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]

druid_zk_service_host=zookeeper

druid_metadata_storage_host=
druid_metadata_storage_type=postgresql
druid_metadata_storage_connector_connectURI=jdbc:postgresql://postgres:5432/druid
druid_metadata_storage_connector_user=druid
druid_metadata_storage_connector_password=FoolishPassword

druid_coordinator_balancer_strategy=cachingCost

druid_indexer_runner_javaOptsArray=["-server", "-Xms64m", "-Xmx64m", "-XX:MaxDirectMemorySize=400m", "-Duser.timezone=UTC", "-Dfile.encoding=UTF-8", "-Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager"]
druid_indexer_fork_property_druid_processing_buffer_sizeBytes=25000000

druid_storage_type=local
druid_storage_storageDirectory=/opt/druid/deepStorage
druid_indexer_logs_type=file
druid_indexer_logs_directory=/opt/druid/data/indexing-logs

druid_processing_numThreads=1
druid_processing_numMergeBuffers=2

DRUID_LOG4J=<?xml version="1.0" encoding="UTF-8" ?><Configuration status="WARN"><Appenders><Console name="Console" target="SYSTEM_OUT"><PatternLayout pattern="%d{ISO8601} %p [%t] %c - %m%n"/></Console></Appenders><Loggers><Root level="info"><AppenderRef ref="Console"/></Root><Logger name="org.apache.druid.jetty.RequestLog" additivity="false" level="DEBUG"><AppenderRef ref="Console"/></Logger></Loggers></Configuration>

53

deploy/docker/environment_small/historical

Normal file


@@ -0,0 +1,53 @@

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

# Java tuning
DRUID_XMX=512m
DRUID_XMS=512m
DRUID_MAXNEWSIZE=256m
DRUID_NEWSIZE=256m
DRUID_MAXDIRECTMEMORYSIZE=1280m

druid_emitter_logging_logLevel=debug

druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]

druid_zk_service_host=zookeeper

druid_metadata_storage_host=
druid_metadata_storage_type=postgresql
druid_metadata_storage_connector_connectURI=jdbc:postgresql://postgres:5432/druid
druid_metadata_storage_connector_user=druid
druid_metadata_storage_connector_password=FoolishPassword

druid_coordinator_balancer_strategy=cachingCost

druid_indexer_runner_javaOptsArray=["-server", "-Xms512m", "-Xmx512m", "-XX:MaxDirectMemorySize=1280m", "-Duser.timezone=UTC", "-Dfile.encoding=UTF-8", "-Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager"]
druid_indexer_fork_property_druid_processing_buffer_sizeBytes=25000000
druid_processing_buffer_sizeBytes=200MiB

druid_storage_type=local
druid_storage_storageDirectory=/opt/druid/deepStorage
druid_indexer_logs_type=file
druid_indexer_logs_directory=/opt/druid/data/indexing-logs

druid_processing_numThreads=2
druid_processing_numMergeBuffers=2

DRUID_LOG4J=<?xml version="1.0" encoding="UTF-8" ?><Configuration status="WARN"><Appenders><Console name="Console" target="SYSTEM_OUT"><PatternLayout pattern="%d{ISO8601} %p [%t] %c - %m%n"/></Console></Appenders><Loggers><Root level="info"><AppenderRef ref="Console"/></Root><Logger name="org.apache.druid.jetty.RequestLog" additivity="false" level="DEBUG"><AppenderRef ref="Console"/></Logger></Loggers></Configuration>

53

deploy/docker/environment_small/middlemanager

Normal file


@@ -0,0 +1,53 @@

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

# Java tuning
DRUID_XMX=1g
DRUID_XMS=1g
DRUID_MAXNEWSIZE=256m
DRUID_NEWSIZE=256m
DRUID_MAXDIRECTMEMORYSIZE=2g

druid_emitter_logging_logLevel=debug

druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]

druid_zk_service_host=zookeeper

druid_metadata_storage_host=
druid_metadata_storage_type=postgresql
druid_metadata_storage_connector_connectURI=jdbc:postgresql://postgres:5432/druid
druid_metadata_storage_connector_user=druid
druid_metadata_storage_connector_password=FoolishPassword

druid_coordinator_balancer_strategy=cachingCost

druid_indexer_runner_javaOptsArray=["-server", "-Xms1g", "-Xmx1g", "-XX:MaxDirectMemorySize=2g", "-Duser.timezone=UTC", "-Dfile.encoding=UTF-8", "-Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager"]
druid_indexer_fork_property_druid_processing_buffer_sizeBytes=25000000
druid_processing_buffer_sizeBytes=200MiB

druid_storage_type=local
druid_storage_storageDirectory=/opt/druid/deepStorage
druid_indexer_logs_type=file
druid_indexer_logs_directory=/opt/druid/data/indexing-logs

druid_processing_numThreads=2
druid_processing_numMergeBuffers=2

DRUID_LOG4J=<?xml version="1.0" encoding="UTF-8" ?><Configuration status="WARN"><Appenders><Console name="Console" target="SYSTEM_OUT"><PatternLayout pattern="%d{ISO8601} %p [%t] %c - %m%n"/></Console></Appenders><Loggers><Root level="info"><AppenderRef ref="Console"/></Root><Logger name="org.apache.druid.jetty.RequestLog" additivity="false" level="DEBUG"><AppenderRef ref="Console"/></Logger></Loggers></Configuration>
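In these files, `druid_indexer_runner_javaOptsArray` repeats the heap and direct-memory sizes that `DRUID_XMX`, `DRUID_XMS` and `DRUID_MAXDIRECTMEMORYSIZE` set for the service's own JVM. On the middle manager, that array configures the JVMs forked for indexing tasks. Keeping the two in step appears to be a convention of this setup rather than a Druid requirement.

The Python sketch below flags drift between them, under that assumption. `parse_env` and `check_java_opts` are hypothetical helpers.

```python
import json


def parse_env(text: str) -> dict:
    """Read KEY=VALUE lines, skipping blank lines and '#' comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            env[key] = value
    return env


def check_java_opts(env: dict) -> list:
    """List mismatches between the DRUID_* sizes and the task javaOptsArray."""
    opts = json.loads(env["druid_indexer_runner_javaOptsArray"])
    expected = {
        "-Xmx": env["DRUID_XMX"],
        "-Xms": env["DRUID_XMS"],
        "-XX:MaxDirectMemorySize=": env["DRUID_MAXDIRECTMEMORYSIZE"],
    }
    problems = []
    for prefix, want in expected.items():
        # Collect every value given for this flag; exactly one matching value is expected.
        found = [opt[len(prefix):] for opt in opts if opt.startswith(prefix)]
        if found != [want]:
            problems.append(f"{prefix}{want} expected, found {found}")
    return problems
```

An empty list means the two sets of sizes agree. Any entry names the flag whose value differs.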

52

deploy/docker/environment_small/router

Normal file


@@ -0,0 +1,52 @@

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

# Java tuning
DRUID_XMX=128m
DRUID_XMS=128m
DRUID_MAXNEWSIZE=256m
DRUID_NEWSIZE=256m
DRUID_MAXDIRECTMEMORYSIZE=128m

druid_emitter_logging_logLevel=debug

druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]

druid_zk_service_host=zookeeper

druid_metadata_storage_host=
druid_metadata_storage_type=postgresql
druid_metadata_storage_connector_connectURI=jdbc:postgresql://postgres:5432/druid
druid_metadata_storage_connector_user=druid
druid_metadata_storage_connector_password=FoolishPassword

druid_coordinator_balancer_strategy=cachingCost

druid_indexer_runner_javaOptsArray=["-server", "-Xms128m", "-Xmx128m", "-XX:MaxDirectMemorySize=128m", "-Duser.timezone=UTC", "-Dfile.encoding=UTF-8", "-Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager"]
druid_indexer_fork_property_druid_processing_buffer_sizeBytes=25000000

druid_storage_type=local
druid_storage_storageDirectory=/opt/druid/deepStorage
druid_indexer_logs_type=file
druid_indexer_logs_directory=/opt/druid/data/indexing-logs

druid_processing_numThreads=1
druid_processing_numMergeBuffers=2

DRUID_LOG4J=<?xml version="1.0" encoding="UTF-8" ?><Configuration status="WARN"><Appenders><Console name="Console" target="SYSTEM_OUT"><PatternLayout pattern="%d{ISO8601} %p [%t] %c - %m%n"/></Console></Appenders><Loggers><Root level="info"><AppenderRef ref="Console"/></Root><Logger name="org.apache.druid.jetty.RequestLog" additivity="false" level="DEBUG"><AppenderRef ref="Console"/></Logger></Loggers></Configuration>

52

deploy/docker/environment_tiny/broker

Normal file


@@ -0,0 +1,52 @@

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

# Java tuning
DRUID_XMX=512m
DRUID_XMS=512m
DRUID_MAXNEWSIZE=256m
DRUID_NEWSIZE=256m
DRUID_MAXDIRECTMEMORYSIZE=400m

druid_emitter_logging_logLevel=debug

# druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]




druid_zk_service_host=zookeeper

druid_metadata_storage_host=
druid_metadata_storage_type=postgresql
druid_metadata_storage_connector_connectURI=jdbc:postgresql://postgres:5432/druid
druid_metadata_storage_connector_user=druid
druid_metadata_storage_connector_password=FoolishPassword

druid_coordinator_balancer_strategy=cachingCost

druid_indexer_runner_javaOptsArray=["-server", "-Xms512m", "-Xmx512m", "-XX:MaxDirectMemorySize=400m", "-Duser.timezone=UTC", "-Dfile.encoding=UTF-8", "-Djava.util.logging.manager=org.apache.logging.log4j.jul.LogManager"]
druid_indexer_fork_property_druid_processing_buffer_sizeBytes=25000000
druid_processing_buffer_sizeBytes=50MiB


druid_processing_numThreads=1
druid_processing_numMergeBuffers=2

DRUID_LOG4J=<?xml version="1.0" encoding="UTF-8" ?><Configuration status="WARN"><Appenders><Console name="Console" target="SYSTEM_OUT"><PatternLayout pattern="%d{ISO8601} %p [%t] %c - %m%n"/></Console></Appenders><Loggers><Root level="info"><AppenderRef ref="Console"/></Root><Logger name="org.apache.druid.jetty.RequestLog" additivity="false" level="DEBUG"><AppenderRef ref="Console"/></Logger></Loggers></Configuration>

26

deploy/docker/environment_tiny/common

Normal file


@@ -0,0 +1,26 @@

# For S3 storage

# druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service", "druid-s3-extensions"]


# druid_storage_type=s3
# druid_storage_bucket=<s3-bucket-name>
# druid_storage_baseKey=druid/segments

# AWS_ACCESS_KEY_ID=<s3-access-id>
# AWS_SECRET_ACCESS_KEY=<s3-access-key>
# AWS_REGION=<s3-aws-region>

# druid_indexer_logs_type=s3
# druid_indexer_logs_s3Bucket=<s3-bucket-name>
# druid_indexer_logs_s3Prefix=druid/indexing-logs

# -----------------------------------------------------------
# For local storage
druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]

druid_storage_type=local
druid_storage_storageDirectory=/opt/data/segments
druid_indexer_logs_type=file
druid_indexer_logs_directory=/opt/data/indexing-logs

49

deploy/docker/environment_tiny/coordinator

Normal file


@@ -0,0 +1,49 @@

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

# Java tuning
DRUID_XMX=64m
DRUID_XMS=64m
DRUID_MAXNEWSIZE=256m
DRUID_NEWSIZE=256m
DRUID_MAXDIRECTMEMORYSIZE=400m

druid_emitter_logging_logLevel=debug

# druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]


druid_zk_service_host=zookeeper

druid_metadata_storage_host=
druid_metadata_storage_type=postgresql
druid_metadata_storage_connector_connectURI=jdbc:postgresql://postgres:5432/druid
druid_metadata_storage_connector_user=druid
druid_metadata_storage_connector_password=FoolishPassword

druid_coordinator_balancer_strategy=cachingCost