Mirror of https://github.com/SigNoz/signoz.git, synced 2025-12-28 22:39:57 +00:00

Compare commits

170 Commits

| SHA1 |
|---|
| 2e71230bbf |
| bf2002d6a2 |
| 59749d0576 |
| c9c6ccc687 |
| 88082c1278 |
| 84f150bc18 |
| 299e80ca49 |
| 7127dec6f6 |
| 6afb91fa84 |
| 72f5688194 |
| a118c3c8a1 |
| 9baf873521 |
| 12911db945 |
| bd149f4364 |
| c69b9ae62a |
| bc3f16d3de |
| 61bbd5551b |
| 286577d13d |
| dbd0701779 |
| 0c7a5ce3c7 |
| a92381df1b |
| eb1509d385 |
| 34e33af290 |
| c0004cd51c |
| 10bf545c65 |
| 7d2bcf11c3 |
| 3ff7ace54e |
| abdfe6ccc5 |
| aa398263fb |
| ace02486e0 |
| b318ba6b2f |
| de4be411f4 |
| 362f264bae |
| e94d984cdb |
| bf0267d579 |
| e4b3ea1f34 |
| 4ee6d4b546 |
| a7836c26d0 |
| 15eb5364d5 |
| 47bf512a33 |
| 2776bfa311 |
| 8c7ac88f84 |
| a08ad9e2cf |
| d312398f18 |
| d891c3e118 |
| 1e7b68203f |
| 3d152e23cd |
| 47cf1eebf7 |
| 6c84882dca |
| a4424eca0e |
| 77992a59bc |
| 3cbb071138 |
| 9cd6e5cabe |
| 13bec63fca |
| f2164a1a86 |
| 8a4f58e77b |
| 51a24673b9 |
| c94feb9af2 |
| a8668d19a8 |
| a8e81c9666 |
| 2eed75560d |
| 8d6fb7f897 |
| 4cd0088029 |
| 872c8adbbb |
| bba7344bae |
| 51fe634566 |
| af58d085a0 |
| 5b9b344816 |
| 1caa07e0af |
| ae23cec8d6 |
| 5afc04f205 |
| 6aed23ce66 |
| 007e2e7b78 |
| 762a3cdfcd |
| 308f8f8fed |
| 588bf2b93a |
| fff38b58d2 |
| cbd2036613 |
| 7ef72d4147 |
| 07af5c843a |
| e524ce5743 |
| 24e1346521 |
| 62e77613a6 |
| 56c0265660 |
| 91b1d08dff |
| 239c2cb859 |
| 4173258d0a |
| 1cbbdd8265 |
| 433f3f3d94 |
| fed23a6ab9 |
| b979c24cb4 |
| e4b41b1a27 |
| 44495b7669 |
| cc3133b2d6 |
| 9c83319143 |
| 571c08c58e |
| 092cfc7804 |
| 245050aac2 |
| 606fa6591d |
| 55f7f56acf |
| e6b3a6c9db |
| d6884cacdb |
| bb155d2356 |
| c49ffd83a3 |
| 8a5178f0dc |
| 057fba112b |
| 4c0b81b5c7 |
| 1d2f964a63 |
| 171fd714de |
| 789880fa07 |
| f25edf1e29 |
| c6e2e297d5 |
| 2bc01e50bd |
| 38770809e3 |
| 9dd9f1133b |
| 8b743f7803 |
| 868b7691b3 |
| 613e6ba5f9 |
| 8fe2fe5aec |
| 55a7b5b1b3 |
| 8b0abbec79 |
| 24416ceabd |
| 2482e91348 |
| fcc248ddf6 |
| 3318ec8c38 |
| a416767950 |
| 173bd01e70 |
| de4adeded5 |
| 674fb34115 |
| 9c74f0bae5 |
| 2999adc98f |
| be7d8c3347 |
| 41dd007380 |
| 83eb73ee03 |
| 5b2f985710 |
| e9c03c4d85 |
| d07e277220 |
| 9bcdb2ede6 |
| 4bbc4eef1a |
| 36ad8987dd |
| 45f1c2ec11 |
| 705279b6fd |
| 9ac2dece11 |
| 325ca434d4 |
| 128d75a144 |
| 45375fbd53 |
| 2d646c0655 |
| 6f12d06a32 |
| bc02aa5eef |
| c7ed2daf4a |
| 5e97dfa5fc |
| 44666a4944 |
| 14f6a23f51 |
| 050b57c72b |
| 0f891ccb26 |
| b3755325ba |
| a1468cf126 |
| 3014948f26 |
| 1e1fc38c96 |
| dad678a4c1 |
| f91d8685e3 |
| 50a2f3b6f9 |
| 97c7543557 |
| e4c8dcf3ca |
| 5a6158a2e5 |
| 9936b3ab46 |
| 673d65db40 |
| 5e1592274c |
| a50fd14ef2 |
| baedfa62d2 |

33

.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file

@@ -0,0 +1,33 @@

---
name: Bug report
about: Create a report to help us improve
title: ''
labels: ''
assignees: ''

---

## Bug description

*Please describe.*
*If this affects the front-end, screenshots would be of great help.*

## Expected behavior

## How to reproduce

1.
2.
3.

## Version information

* **SigNoz version**:
* **Browser version**:
* **Your OS and version**:

## Additional context

#### *Thank you* for your bug report – we love squashing them!

27

.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file

@@ -0,0 +1,27 @@

---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: ''
assignees: ''

---

## Is your feature request related to a problem?

*Please describe.*

## Describe the solution you'd like

## Describe alternatives you've considered

## Additional context

Add any other context or screenshots about the feature request here.

#### *Thank you* for your feature request – we love each and every one!

33

.github/ISSUE_TEMPLATE/performance-issue-report.md

vendored

Normal file

@@ -0,0 +1,33 @@

---
name: Performance issue report
about: Long response times, high resource usage? Ensuring that SigNoz is scalable
is our top priority
title: ''
labels: ''
assignees: ''

---

## In what situation are you experiencing subpar performance?

*Please describe.*

## How to reproduce

1.
2.
3.

## Your Environment

- [ ] Linux
- [ ] Mac
- [ ] Windows

Please provide details such as your OS version.

## Additional context

#### *Thank you* for your performance issue report – we want SigNoz to be blazing fast!

@@ -1,9 +1,64 @@

# How to Contribute

You can always reach out to ankit@signoz.io to understand more about the repo and product. We are very responsive over email and [slack](https://signoz-community.slack.com/join/shared_invite/zt-kj26gm1u-Xe3CYxCu0bGXCrCqKipjOA#/).
There are primarily 3 areas in which you can contribute to SigNoz:

- Frontend (written in TypeScript, React)
- Query Service (written in Go)
- Flattener Processor (written in Go)

Depending on your area of expertise and interest, you can choose one or more areas to contribute to. Detailed instructions for each area are below.

# Develop Frontend

The frontend code lives at [https://github.com/SigNoz/signoz/tree/main/frontend](https://github.com/SigNoz/signoz/tree/main/frontend)

### Contribute to Frontend with Docker installation of SigNoz

- `git clone https://github.com/SigNoz/signoz.git && cd signoz`
- comment out the frontend service section at `deploy/docker/clickhouse-setup/docker-compose.yaml#L38`
- run `cd deploy && docker-compose -f docker/clickhouse-setup/docker-compose.yaml up -d` (this installs SigNoz locally without the frontend service)
- `cd ../frontend` and change baseURL to `http://localhost:8080` in file `src/constants/env.ts`
- `yarn install`
- `yarn dev`

### Contribute to Frontend without installing SigNoz backend

If you don't want to install the SigNoz backend just for frontend development, we can provide test environments that you can use as the backend. Please ping us in the #contributing channel in our [slack community](https://join.slack.com/t/signoz-community/shared_invite/zt-lrjknbbp-J_mI13rlw8pGF4EWBnorJA) and we will DM you the `<test environment URL>`.

- `git clone https://github.com/SigNoz/signoz.git && cd signoz/frontend`
- change baseURL to `<test environment URL>` in file `src/constants/env.ts`
- `yarn install`
- `yarn dev`

**_Frontend should now be accessible at `http://localhost:3000/application`_**

# Contribute to Query-Service

The query-service code lives at [https://github.com/SigNoz/signoz/tree/main/pkg/query-service](https://github.com/SigNoz/signoz/tree/main/pkg/query-service)

### To run ClickHouse setup (recommended for local development)

- `git clone https://github.com/SigNoz/signoz.git && cd signoz/deploy`
- comment out the frontend service section at `docker/clickhouse-setup/docker-compose.yaml#L38`
- comment out the query-service section at `docker/clickhouse-setup/docker-compose.yaml#L22`
- Run `docker-compose -f docker/clickhouse-setup/docker-compose.yaml up -d` (this installs SigNoz locally without the frontend and query-service)
- `cd ../pkg/query-service` and run `STORAGE=clickhouse ClickHouseUrl=tcp://localhost:9001 go run main.go`

**_Query Service should now be available at `http://localhost:8080`_**
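
The command above configures the query service through two environment variables, `STORAGE` and `ClickHouseUrl`. The Go sketch below shows one way a service could read and validate them. It is not the query-service's actual code. `storageConfig` and `loadStorageConfig` are hypothetical names, and the rule that `ClickHouseUrl` is required when `STORAGE=clickhouse` is an assumption made for this example.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// storageConfig is a hypothetical struct used only for this sketch; the real
// query-service may organise its configuration differently.
type storageConfig struct {
	Storage       string // value of STORAGE, e.g. "clickhouse"
	ClickHouseURL string // value of ClickHouseUrl, e.g. "tcp://localhost:9001"
}

// loadStorageConfig reads STORAGE and ClickHouseUrl through getenv
// (os.Getenv in practice, a stub in tests) and rejects incomplete settings.
func loadStorageConfig(getenv func(string) string) (storageConfig, error) {
	cfg := storageConfig{
		Storage:       getenv("STORAGE"),
		ClickHouseURL: getenv("ClickHouseUrl"),
	}
	if cfg.Storage == "" {
		return cfg, errors.New("STORAGE is not set")
	}
	// Assumed rule for this sketch: the ClickHouse backend needs a URL.
	if cfg.Storage == "clickhouse" && cfg.ClickHouseURL == "" {
		return cfg, errors.New("ClickHouseUrl must be set when STORAGE=clickhouse")
	}
	return cfg, nil
}

func main() {
	cfg, err := loadStorageConfig(os.Getenv)
	if err != nil {
		// Print instead of exiting so the sketch is safe to run without the variables.
		fmt.Println("config error:", err)
		return
	}
	fmt.Printf("storage=%s clickhouse=%s\n", cfg.Storage, cfg.ClickHouseURL)
}
```

The lookup function is passed in rather than calling `os.Getenv` directly, so the validation can be tested with a stub. Run with the variables from the command above, this sketch prints `storage=clickhouse clickhouse=tcp://localhost:9001`.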

> If you want to see how the frontend works with the query service, you can also run the frontend in your local environment with the baseURL changed to `http://localhost:8080` in file `src/constants/env.ts`, since the query-service is now running on port `8080`.

# Contribute to Flattener Processor

The flattener processor is not needed for the ClickHouse setup.

More info at [https://github.com/SigNoz/signoz/tree/main/pkg/processors/flattener](https://github.com/SigNoz/signoz/tree/main/pkg/processors/flattener)

## General Instructions

You can always reach out to `ankit@signoz.io` to understand more about the repo and product. We are very responsive over email and [slack](https://join.slack.com/t/signoz-community/shared_invite/zt-lrjknbbp-J_mI13rlw8pGF4EWBnorJA).

- You can create a PR (Pull Request)
- If you find any bugs, please create an issue
- If you find anything missing in the documentation, you can create an issue with label **documentation**

#### If you want to build any new feature, please create an issue with label `enhancement`
- If you want to build any new feature, please create an issue with label `enhancement`
- If you want to discuss something about the product, start a new [discussion](https://github.com/SigNoz/signoz/discussions)

45

README.md

@@ -4,17 +4,27 @@

|

||||

<p align="center">Monitor your applications and troubleshoot problems in your deployed applications, an open-source alternative to DataDog, New Relic, etc.</p>

|

||||

</p>

|

||||

|

||||

[](LICENSE)

|

||||

<p align="center">

|

||||

<img alt="License" src="https://img.shields.io/badge/license-MIT-brightgreen"> </a>

|

||||

<img alt="Downloads" src="https://img.shields.io/docker/pulls/signoz/frontend?label=Downloads"> </a>

|

||||

<img alt="GitHub issues" src="https://img.shields.io/github/issues/signoz/signoz"> </a>

|

||||

<a href="https://twitter.com/intent/tweet?text=Monitor%20your%20applications%20and%20troubleshoot%20problems%20with%20SigNoz,%20an%20open-source%20alternative%20to%20DataDog,%20NewRelic.&url=https://signoz.io/&via=SigNozHQ&hashtags=opensource,signoz,observability">

|

||||

<img alt="tweet" src="https://img.shields.io/twitter/url/http/shields.io.svg?style=social"> </a>

|

||||

</p>

|

||||

|

||||

##

|

||||

|

||||

SigNoz is an opensource observability platform. SigNoz uses distributed tracing to gain visibility into your systems and powers data using [Kafka](https://kafka.apache.org/) (to handle high ingestion rate and backpressure) and [Apache Druid](https://druid.apache.org/) (Apache Druid is a high performance real-time analytics database), both proven in the industry to handle scale.

|

||||

SigNoz helps developers monitor applications and troubleshoot problems in their deployed applications. SigNoz uses distributed tracing to gain visibility into your software stack.

|

||||

|

||||

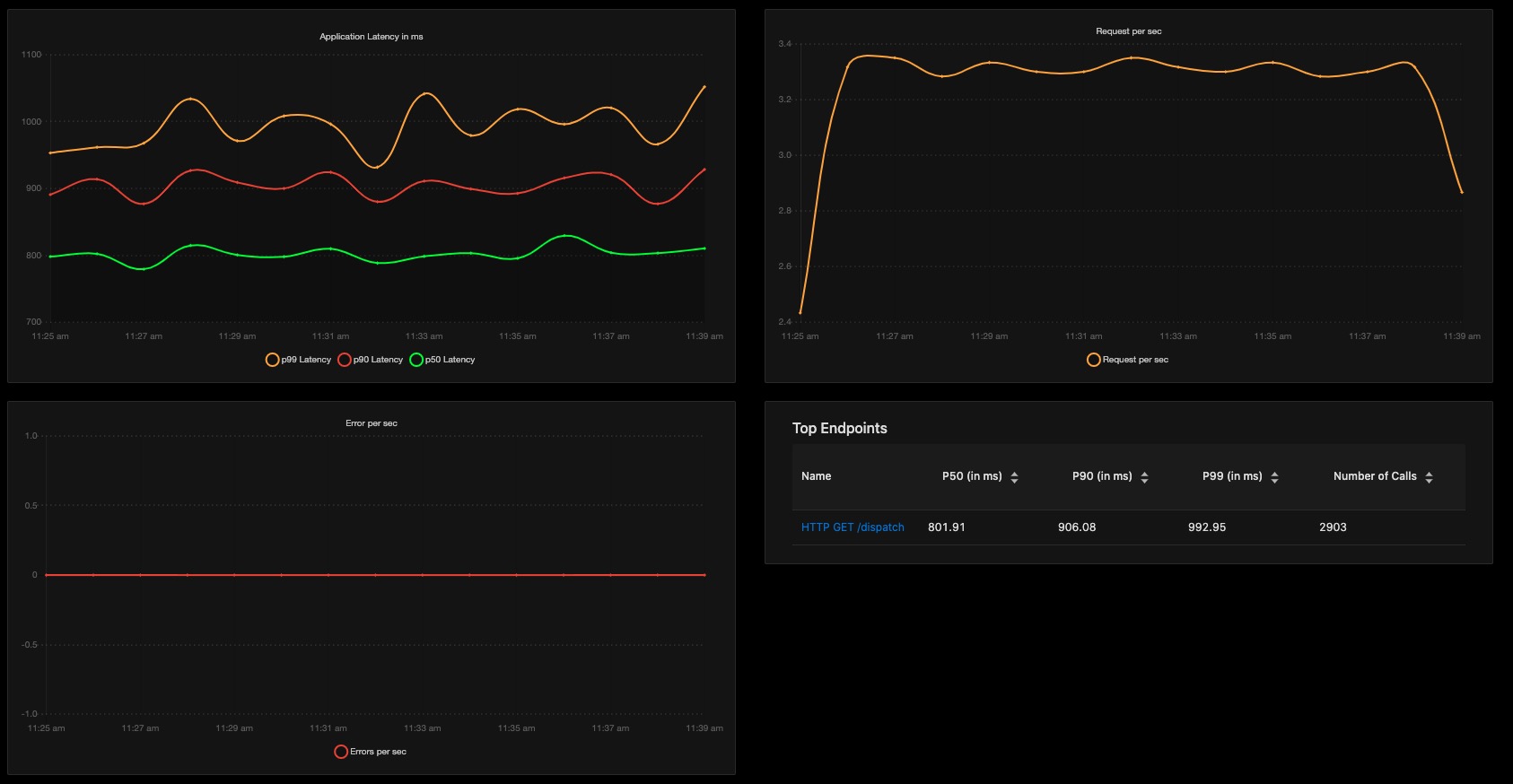

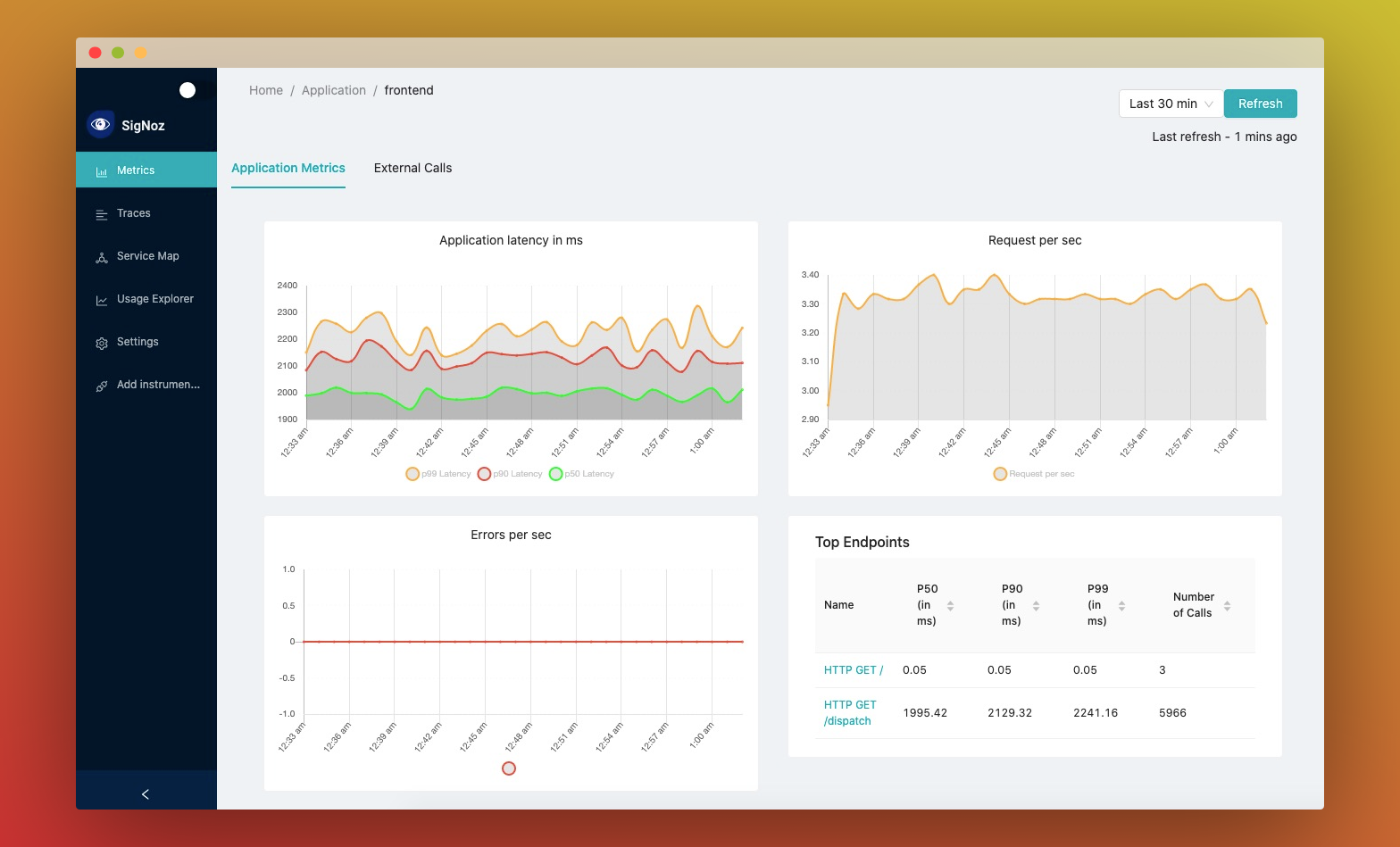

👉 You can see metrics like p99 latency, error rates for your services, external API calls and individual end points.

|

||||

|

||||

👉 You can find the root cause of the problem by going to the exact traces which are causing the problem and see detailed flamegraphs of individual request traces.

|

||||

|

||||

<!--  -->

|

||||

|

||||

|

||||

|

||||

### Features:

|

||||

### 👇 Features:

|

||||

|

||||

- Application overview metrics like RPS, 50th/90th/99th Percentile latencies, and Error Rate

|

||||

- Slowest endpoints in your application

|

||||

@@ -22,16 +32,25 @@ SigNoz is an opensource observability platform. SigNoz uses distributed tracing

- Filter traces by service name, operation, latency, error, tags/annotations.
- Aggregate metrics on filtered traces. For example, you can get the error rate and 99th percentile latency of `customer_type: gold` or `deployment_version: v2` or `external_call: paypal`
- Unified UI for metrics and traces. No need to switch from Prometheus to Jaeger to debug issues.
- Built-in workflows to reduce your efforts in detecting common issues like new deployment failures, slow 3rd party APIs, etc. (Coming Soon)
- Anomaly Detection Framework (Coming Soon)

### Motivation:
### 🤓 Why SigNoz?

- SaaS vendors charge an insane amount to provide Application Monitoring. They often surprise you with huge month-end bills without any transparency of data sent to them.
- Data privacy and compliance demand that data not leave the network boundary
- Highly scalable architecture
- No more magic happening in agents installed in your infra. You take control of sampling, uptime, configuration.
- Build modules over SigNoz to extend business-specific capabilities

Being developers, we found it annoying to rely on closed-source SaaS vendors for every small feature we wanted. Closed-source vendors often surprise you with huge month-end bills without any transparency.

We wanted to make a self-hosted & open source version of tools like DataDog and NewRelic for companies that have privacy and security concerns about sending customer data to third-party services.

Being open source also gives you complete control of your configuration, sampling, uptimes. You can also build modules over SigNoz to extend business-specific capabilities.

### 👊🏻 Languages supported:

We support [OpenTelemetry](https://opentelemetry.io) as the library which you can use to instrument your applications. So any framework and language supported by OpenTelemetry is also supported by SigNoz. Some of the main supported languages are:

- Java
- Python
- NodeJS
- Go

You can find the complete list of languages here - https://opentelemetry.io/docs/

# Getting Started

@@ -41,9 +60,9 @@ We have a tiny-cluster setup and a standard setup to deploy using docker-compose

Follow the steps listed at https://signoz.io/docs/deployment/docker/.
The troubleshooting instructions at https://signoz.io/docs/deployment/docker/#troubleshooting may be helpful.

## Deploy in Kubernetes using Helm.
## Deploy in Kubernetes using Helm

The steps below install SigNoz in the platform namespace inside your k8s cluster.
The steps below install SigNoz in the `platform` namespace inside your k8s cluster.

```console
git clone https://github.com/SigNoz/signoz.git && cd signoz
```

517

deploy/docker/clickhouse-setup/clickhouse-config.xml

Normal file

@@ -0,0 +1,517 @@

<?xml version="1.0"?>
<yandex>
<logger>
<level>trace</level>
<log>/var/log/clickhouse-server/clickhouse-server.log</log>
<errorlog>/var/log/clickhouse-server/clickhouse-server.err.log</errorlog>
<size>1000M</size>
<count>10</count>
</logger>

<http_port>8123</http_port>
<tcp_port>9000</tcp_port>

<!-- For HTTPS and SSL over native protocol. -->
<!--
<https_port>8443</https_port>
<tcp_ssl_port>9440</tcp_ssl_port>
-->

<!-- Used with https_port and tcp_ssl_port. Full ssl options list: https://github.com/yandex/ClickHouse/blob/master/contrib/libpoco/NetSSL_OpenSSL/include/Poco/Net/SSLManager.h#L71 -->
<openSSL>
<server> <!-- Used for https server AND secure tcp port -->
<!-- openssl req -subj "/CN=localhost" -new -newkey rsa:2048 -days 365 -nodes -x509 -keyout /etc/clickhouse-server/server.key -out /etc/clickhouse-server/server.crt -->
<certificateFile>/etc/clickhouse-server/server.crt</certificateFile>
<privateKeyFile>/etc/clickhouse-server/server.key</privateKeyFile>
<!-- openssl dhparam -out /etc/clickhouse-server/dhparam.pem 4096 -->
<dhParamsFile>/etc/clickhouse-server/dhparam.pem</dhParamsFile>
<verificationMode>none</verificationMode>
<loadDefaultCAFile>true</loadDefaultCAFile>
<cacheSessions>true</cacheSessions>
<disableProtocols>sslv2,sslv3</disableProtocols>
<preferServerCiphers>true</preferServerCiphers>
</server>

<client> <!-- Used for connecting to https dictionary source -->
<loadDefaultCAFile>true</loadDefaultCAFile>
<cacheSessions>true</cacheSessions>
<disableProtocols>sslv2,sslv3</disableProtocols>
<preferServerCiphers>true</preferServerCiphers>
<!-- Use for self-signed: <verificationMode>none</verificationMode> -->
<invalidCertificateHandler>
<!-- Use for self-signed: <name>AcceptCertificateHandler</name> -->
<name>RejectCertificateHandler</name>
</invalidCertificateHandler>
</client>
</openSSL>

<!-- Default root page on http[s] server. For example load UI from https://tabix.io/ when opening http://localhost:8123 -->
<!--
<http_server_default_response><![CDATA[<html ng-app="SMI2"><head><base href="http://ui.tabix.io/"></head><body><div ui-view="" class="content-ui"></div><script src="http://loader.tabix.io/master.js"></script></body></html>]]></http_server_default_response>
-->

<!-- Port for communication between replicas. Used for data exchange. -->
<interserver_http_port>9009</interserver_http_port>

<!-- Hostname that is used by other replicas to request this server.
If not specified, then it is determined analogously to the 'hostname -f' command.
This setting could be used to switch replication to another network interface.
-->
<!--
<interserver_http_host>example.yandex.ru</interserver_http_host>
-->

<!-- Listen on the specified host. Use :: (wildcard IPv6 address) if you want to accept connections both with IPv4 and IPv6 from everywhere. -->
<listen_host>::</listen_host>
<!-- Same for hosts with disabled ipv6: -->
<!-- <listen_host>0.0.0.0</listen_host> -->

<!-- Default values - try to listen on localhost on ipv4 and ipv6: -->
<!-- <listen_host>0.0.0.0</listen_host> -->

<max_connections>4096</max_connections>
<keep_alive_timeout>3</keep_alive_timeout>

<!-- Maximum number of concurrent queries. -->
<max_concurrent_queries>100</max_concurrent_queries>

<!-- Set limit on number of open files (default: maximum). This setting makes sense on Mac OS X because getrlimit() fails to retrieve
correct maximum value. -->
<!-- <max_open_files>262144</max_open_files> -->

<!-- Size of cache of uncompressed blocks of data, used in tables of MergeTree family.
In bytes. Cache is single for server. Memory is allocated only on demand.
Cache is used when 'use_uncompressed_cache' user setting turned on (off by default).
Uncompressed cache is advantageous only for very short queries and in rare cases.
-->
<uncompressed_cache_size>8589934592</uncompressed_cache_size>

<!-- Approximate size of mark cache, used in tables of MergeTree family.
In bytes. Cache is single for server. Memory is allocated only on demand.
You should not lower this value.
-->
<mark_cache_size>5368709120</mark_cache_size>

<!-- Path to data directory, with trailing slash. -->
<path>/var/lib/clickhouse/</path>

<!-- Path to temporary data for processing hard queries. -->
<tmp_path>/var/lib/clickhouse/tmp/</tmp_path>

<!-- Path to configuration file with users, access rights, profiles of settings, quotas. -->
<users_config>users.xml</users_config>

<!-- Default profile of settings. -->
<default_profile>default</default_profile>

<!-- Default database. -->
<default_database>default</default_database>

<!-- Server time zone could be set here.

Time zone is used when converting between String and DateTime types,
when printing DateTime in text formats and parsing DateTime from text,
it is used in date and time related functions, if specific time zone was not passed as an argument.

Time zone is specified as identifier from IANA time zone database, like UTC or Africa/Abidjan.
If not specified, system time zone at server startup is used.

Please note that the server could display a time zone alias instead of the specified name.
Example: W-SU is an alias for Europe/Moscow and Zulu is an alias for UTC.
-->
<!-- <timezone>Europe/Moscow</timezone> -->

<!-- You can specify umask here (see "man umask"). Server will apply it on startup.
Number is always parsed as octal. Default umask is 027 (other users cannot read logs, data files, etc; group can only read).
-->
<!-- <umask>022</umask> -->

<!-- Configuration of clusters that could be used in Distributed tables.
https://clickhouse.yandex/reference_en.html#Distributed
-->
<remote_servers incl="clickhouse_remote_servers" >
<!-- Test only shard config for testing distributed storage -->
<test_shard_localhost>
<shard>
<replica>
<host>localhost</host>
<port>9000</port>
</replica>
</shard>
</test_shard_localhost>
</remote_servers>

<!-- If an element has an 'incl' attribute, the corresponding substitution from another file is used as its value.
By default, path to file with substitutions is /etc/metrika.xml. It could be changed in config in 'include_from' element.
Values for substitutions are specified in /yandex/name_of_substitution elements in that file.
-->

<!-- ZooKeeper is used to store metadata about replicas, when using Replicated tables.
Optional. If you don't use replicated tables, you could omit that.

See https://clickhouse.yandex/reference_en.html#Data%20replication
-->
<zookeeper incl="zookeeper-servers" optional="true" />

<!-- Substitutions for parameters of replicated tables.
Optional. If you don't use replicated tables, you could omit that.

See https://clickhouse.yandex/reference_en.html#Creating%20replicated%20tables
-->
<macros incl="macros" optional="true" />

<!-- Reloading interval for embedded dictionaries, in seconds. Default: 3600. -->
<builtin_dictionaries_reload_interval>3600</builtin_dictionaries_reload_interval>

<!-- Maximum session timeout, in seconds. Default: 3600. -->
<max_session_timeout>3600</max_session_timeout>

<!-- Default session timeout, in seconds. Default: 60. -->
<default_session_timeout>60</default_session_timeout>

<!-- Sending data to Graphite for monitoring. Several sections can be defined. -->
<!--
interval - send every X seconds
root_path - prefix for keys
hostname_in_path - append hostname to root_path (default = true)
metrics - send data from table system.metrics
events - send data from table system.events
asynchronous_metrics - send data from table system.asynchronous_metrics
-->
<!--
<graphite>
<host>localhost</host>
<port>42000</port>
<timeout>0.1</timeout>
<interval>60</interval>
<root_path>one_min</root_path>
<hostname_in_path>true</hostname_in_path>

<metrics>true</metrics>
<events>true</events>
<asynchronous_metrics>true</asynchronous_metrics>
</graphite>
<graphite>
<host>localhost</host>
<port>42000</port>
<timeout>0.1</timeout>
<interval>1</interval>
<root_path>one_sec</root_path>

<metrics>true</metrics>
<events>true</events>
<asynchronous_metrics>false</asynchronous_metrics>
</graphite>
-->

<!-- Query log. Used only for queries with setting log_queries = 1. -->
<query_log>
<!-- Table to insert data into. If the table does not exist, it will be created.
When query log structure is changed after system update,
then old table will be renamed and new table will be created automatically.
-->
<database>system</database>
<table>query_log</table>

<!-- Interval of flushing data. -->
<flush_interval_milliseconds>7500</flush_interval_milliseconds>
</query_log>

<!-- Uncomment to use part_log
<part_log>
<database>system</database>
<table>part_log</table>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>
</part_log>
-->

<!-- Parameters for embedded dictionaries, used in Yandex.Metrica.
See https://clickhouse.yandex/reference_en.html#Internal%20dictionaries
-->

<!-- Path to file with region hierarchy. -->
<!-- <path_to_regions_hierarchy_file>/opt/geo/regions_hierarchy.txt</path_to_regions_hierarchy_file> -->

<!-- Path to directory with files containing names of regions -->
<!-- <path_to_regions_names_files>/opt/geo/</path_to_regions_names_files> -->

<!-- Configuration of external dictionaries. See:
https://clickhouse.yandex/reference_en.html#External%20Dictionaries
-->
<dictionaries_config>*_dictionary.xml</dictionaries_config>

<!-- Uncomment if you want data to be compressed 30-100% better.
Don't do that if you just started using ClickHouse.
-->
<compression incl="clickhouse_compression">
<!--
<!- - Set of variants. Checked in order. Last matching case wins. If nothing matches, lz4 will be used. - ->
<case>

<!- - Conditions. All must be satisfied. Some conditions may be omitted. - ->
<min_part_size>10000000000</min_part_size> <!- - Min part size in bytes. - ->
<min_part_size_ratio>0.01</min_part_size_ratio> <!- - Min size of part relative to whole table size. - ->

<!- - What compression method to use. - ->
<method>zstd</method>
</case>
-->
</compression>

<!-- Allows executing distributed DDL queries (CREATE, DROP, ALTER, RENAME) on the cluster.
Works only if ZooKeeper is enabled. Comment it out if such functionality isn't required. -->
<distributed_ddl>
<!-- Path in ZooKeeper to queue with DDL queries -->
<path>/clickhouse/task_queue/ddl</path>
</distributed_ddl>

<!-- Settings to fine tune MergeTree tables. See documentation in source code, in MergeTreeSettings.h -->
<!--
<merge_tree>
<max_suspicious_broken_parts>5</max_suspicious_broken_parts>
</merge_tree>
-->

<!-- Protection from accidental DROP.
If the size of a MergeTree table is greater than max_table_size_to_drop (in bytes), then the table cannot be dropped with any DROP query.
If you want to delete one table and don't want to restart clickhouse-server, you can create the special file <clickhouse-path>/flags/force_drop_table and run DROP once.
By default max_table_size_to_drop is 50GB; max_table_size_to_drop=0 allows dropping any table.
Uncomment to disable protection.
-->
<!-- <max_table_size_to_drop>0</max_table_size_to_drop> -->

<!-- Example of parameters for GraphiteMergeTree table engine -->
<graphite_rollup>
<!-- carbon -->
<pattern>
<regexp>^carbon\.</regexp>
<function>any</function>
<retention>
<age>0</age>
<precision>60</precision>
</retention>
<retention>
<age>7776000</age>
<precision>3600</precision>
</retention>
<retention>
<age>10368000</age>
<precision>21600</precision>
</retention>
<retention>
<age>34560000</age>
<precision>43200</precision>
</retention>
<retention>
<age>63072000</age>
<precision>86400</precision>
</retention>
<retention>
<age>94608000</age>
<precision>604800</precision>
</retention>
</pattern>
<!-- collectd -->
<pattern>
<regexp>^collectd\.</regexp>
<function>any</function>
<retention>
<age>0</age>
<precision>10</precision>
</retention>
<retention>
<age>43200</age>
<precision>60</precision>
</retention>
<retention>
<age>864000</age>
<precision>900</precision>
</retention>
<retention>
<age>1728000</age>
<precision>1800</precision>
</retention>
<retention>
<age>3456000</age>
<precision>3600</precision>
</retention>
<retention>
<age>10368000</age>
<precision>21600</precision>
</retention>
<retention>
<age>34560000</age>
<precision>43200</precision>
</retention>
<retention>
<age>63072000</age>
<precision>86400</precision>
</retention>
<retention>
<age>94608000</age>
<precision>604800</precision>
</retention>
</pattern>
<!-- high -->
<pattern>
<regexp>^high\.</regexp>
<function>any</function>
<retention>
<age>0</age>
<precision>10</precision>
</retention>
<retention>
<age>172800</age>
<precision>60</precision>
</retention>
<retention>
<age>864000</age>
<precision>900</precision>
</retention>
<retention>
<age>1728000</age>
<precision>1800</precision>
</retention>
<retention>
<age>3456000</age>
<precision>3600</precision>
</retention>
<retention>
<age>10368000</age>
<precision>21600</precision>
</retention>
<retention>
<age>34560000</age>
<precision>43200</precision>
</retention>
<retention>
<age>63072000</age>
<precision>86400</precision>
</retention>
<retention>
<age>94608000</age>

||||

<precision>604800</precision>

|

||||

</retention>

|

||||

</pattern>

|

||||

<!-- medium -->

|

||||

<pattern>

|

||||

<regexp>^medium\.</regexp>

|

||||

<function>any</function>

|

||||

<retention>

|

||||

<age>0</age>

|

||||

<precision>60</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>864000</age>

|

||||

<precision>900</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>1728000</age>

|

||||

<precision>1800</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>3456000</age>

|

||||

<precision>3600</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>10368000</age>

|

||||

<precision>21600</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>34560000</age>

|

||||

<precision>43200</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>63072000</age>

|

||||

<precision>86400</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>94608000</age>

|

||||

<precision>604800</precision>

|

||||

</retention>

|

||||

</pattern>

|

||||

<!-- low -->

|

||||

<pattern>

|

||||

<regexp>^low\.</regexp>

|

||||

<function>any</function>

|

||||

<retention>

|

||||

<age>0</age>

|

||||

<precision>600</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>15552000</age>

|

||||

<precision>1800</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>31536000</age>

|

||||

<precision>3600</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>63072000</age>

|

||||

<precision>21600</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>126144000</age>

|

||||

<precision>43200</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>252288000</age>

|

||||

<precision>86400</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>315360000</age>

|

||||

<precision>604800</precision>

|

||||

</retention>

|

||||

</pattern>

|

||||

<!-- default -->

|

||||

<default>

|

||||

<function>any</function>

|

||||

<retention>

|

||||

<age>0</age>

|

||||

<precision>60</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>864000</age>

|

||||

<precision>900</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>1728000</age>

|

||||

<precision>1800</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>3456000</age>

|

||||

<precision>3600</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>10368000</age>

|

||||

<precision>21600</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>34560000</age>

|

||||

<precision>43200</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>63072000</age>

|

||||

<precision>86400</precision>

|

||||

</retention>

|

||||

<retention>

|

||||

<age>94608000</age>

|

||||

<precision>604800</precision>

|

||||

</retention>

|

||||

</default>

|

||||

</graphite_rollup>

|

||||

|

||||

<!-- Directory in <clickhouse-path> containing schema files for various input formats.

|

||||

The directory will be created if it doesn't exist.

|

||||

-->

|

||||

<format_schema_path>/var/lib/clickhouse/format_schemas/</format_schema_path>

|

||||

</yandex>
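The `graphite_rollup` section above is an example configuration for the GraphiteMergeTree engine. As a simplified mental model (not ClickHouse's implementation): the `<pattern>` entries are tried in order, the first whose `regexp` matches the metric name supplies the retention list, and `<default>` applies otherwise; within a list, points at least `age` seconds old are kept at `precision`-second resolution and combined with `function` (`any` here). For instance, 7776000 s is 90 days, so `carbon.*` points older than 90 days keep one value per hour. The Python sketch below reproduces only the carbon and default lists; every name in it is hypothetical.

```python
import re
from typing import List, Tuple

# (age_seconds, precision_seconds) pairs copied from the config above.
Retention = List[Tuple[int, int]]

CARBON: Retention = [(0, 60), (7776000, 3600), (10368000, 21600),
                     (34560000, 43200), (63072000, 86400), (94608000, 604800)]
DEFAULT: Retention = [(0, 60), (864000, 900), (1728000, 1800), (3456000, 3600),
                      (10368000, 21600), (34560000, 43200), (63072000, 86400),
                      (94608000, 604800)]

# Ordered (regexp, retention) rules; only the carbon pattern is reproduced here.
PATTERNS: List[Tuple[str, Retention]] = [(r"^carbon\.", CARBON)]


def pick_retention(metric: str) -> Retention:
    """Return the retention list of the first matching pattern, else the default."""
    for regexp, retention in PATTERNS:
        if re.search(regexp, metric):
            return retention
    return DEFAULT


def precision_for(retention: Retention, age_s: int) -> int:
    """Precision of the step with the largest age that is <= age_s."""
    chosen = retention[0][1]
    for age, precision in retention:  # ages are listed in ascending order
        if age_s >= age:
            chosen = precision
    return chosen


def rollup_timestamp(ts: int, precision: int) -> int:
    """Round a Unix timestamp down to a multiple of the precision."""
    return ts - ts % precision
```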

deploy/docker/clickhouse-setup/docker-compose.yaml
@@ -0,0 +1,97 @@
version: "2.4"

services:
  clickhouse:
    image: yandex/clickhouse-server
    expose:
      - 8123
      - 9000
    ports:
      - 9001:9000
      - 8123:8123
    volumes:
      - ./clickhouse-config.xml:/etc/clickhouse-server/config.xml
      - ./docker-entrypoint-initdb.d/init-db.sql:/docker-entrypoint-initdb.d/init-db.sql
    healthcheck:
      # "clickhouse", "client", "-u ${CLICKHOUSE_USER}", "--password ${CLICKHOUSE_PASSWORD}", "-q 'SELECT 1'"
      test: ["CMD", "wget", "--spider", "-q", "localhost:8123/ping"]
      interval: 30s
      timeout: 5s
      retries: 3

  query-service:
    image: signoz/query-service:0.3.1
    container_name: query-service

    ports:
      - "8080:8080"

    environment:
      - ClickHouseUrl=tcp://clickhouse:9000
      - STORAGE=clickhouse
      - POSTHOG_API_KEY=H-htDCae7CR3RV57gUzmol6IAKtm5IMCvbcm_fwnL-w

    depends_on:
      clickhouse:
        condition: service_healthy

  frontend:
    image: signoz/frontend:0.3.2
    container_name: frontend

    depends_on:
      - query-service
    links:
      - "query-service"
    ports:
      - "3000:3000"
    volumes:
      - ../common/nginx-config.conf:/etc/nginx/conf.d/default.conf


  otel-collector:
    image: signoz/otelcol:latest
    command: ["--config=/etc/otel-collector-config.yaml", "--mem-ballast-size-mib=683"]
    volumes:
      - ./otel-collector-config.yaml:/etc/otel-collector-config.yaml
    ports:
      - "1777:1777"     # pprof extension
      - "8887:8888"     # Prometheus metrics exposed by the agent
      - "14268:14268"   # Jaeger receiver
      - "55678"         # OpenCensus receiver
      - "55680:55680"   # OTLP HTTP/2.0 legacy port
      - "55681:55681"   # OTLP HTTP/1.0 receiver
      - "4317:4317"     # OTLP GRPC receiver
      - "55679:55679"   # zpages extension
      - "13133"         # health_check

    depends_on:
      clickhouse:
        condition: service_healthy

  hotrod:
    image: jaegertracing/example-hotrod:latest
    container_name: hotrod
    ports:
      - "9000:8080"
    command: ["all"]
    environment:
      - JAEGER_ENDPOINT=http://otel-collector:14268/api/traces


  load-hotrod:
    image: "grubykarol/locust:1.2.3-python3.9-alpine3.12"
    container_name: load-hotrod
    hostname: load-hotrod
    ports:
      - "8089:8089"
    environment:
      ATTACKED_HOST: http://hotrod:8080
      LOCUST_MODE: standalone
      NO_PROXY: standalone
      TASK_DELAY_FROM: 5
      TASK_DELAY_TO: 30
      QUIET_MODE: "${QUIET_MODE:-false}"
      LOCUST_OPTS: "--headless -u 10 -r 1"
    volumes:
      - ../common/locust-scripts:/locust
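Host ports published with `ports:` must be unique across services. In this file ClickHouse's native port 9000 is published on host port 9001, which leaves host port 9000 free for HotROD's `9000:8080` mapping; entries such as `"55678"` publish only a container port and let Docker choose the host port. A small hypothetical checker for short-syntax mappings, as a sketch (IPv6 host addresses and port ranges are not handled):

```python
from collections import defaultdict
from typing import Dict, List, Optional


def host_port(mapping: str) -> Optional[int]:
    """Host side of a short-syntax mapping, or None when Docker picks it."""
    spec = mapping.split("/")[0]   # drop an optional "/tcp" or "/udp" suffix
    parts = spec.split(":")
    if len(parts) == 1:            # "55678": container port only
        return None
    return int(parts[-2])          # "9001:9000" or "127.0.0.1:9001:9000"


def host_port_conflicts(services: Dict[str, List[str]]) -> Dict[int, List[str]]:
    """Map every host port claimed by more than one service to those services."""
    owners: Dict[int, List[str]] = defaultdict(list)
    for service, mappings in services.items():
        for mapping in mappings:
            port = host_port(mapping)
            if port is not None:
                owners[port].append(service)
    return {port: names for port, names in owners.items() if len(names) > 1}
```

Feeding it the `ports:` lists from the file above yields no conflicts; changing HotROD to `9001:8080` would report `{9001: ["clickhouse", "hotrod"]}`.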

@@ -0,0 +1,27 @@
CREATE TABLE IF NOT EXISTS signoz_index (
  timestamp DateTime64(9) CODEC(Delta, ZSTD(1)),
  traceID String CODEC(ZSTD(1)),
  spanID String CODEC(ZSTD(1)),
  parentSpanID String CODEC(ZSTD(1)),
  serviceName LowCardinality(String) CODEC(ZSTD(1)),
  name LowCardinality(String) CODEC(ZSTD(1)),
  kind Int32 CODEC(ZSTD(1)),
  durationNano UInt64 CODEC(ZSTD(1)),
  tags Array(String) CODEC(ZSTD(1)),
  tagsKeys Array(String) CODEC(ZSTD(1)),
  tagsValues Array(String) CODEC(ZSTD(1)),
  statusCode Int64 CODEC(ZSTD(1)),
  references String CODEC(ZSTD(1)),
  externalHttpMethod Nullable(String) CODEC(ZSTD(1)),
  externalHttpUrl Nullable(String) CODEC(ZSTD(1)),
  component Nullable(String) CODEC(ZSTD(1)),
  dbSystem Nullable(String) CODEC(ZSTD(1)),
  dbName Nullable(String) CODEC(ZSTD(1)),
  dbOperation Nullable(String) CODEC(ZSTD(1)),
  peerService Nullable(String) CODEC(ZSTD(1)),
  INDEX idx_tagsKeys tagsKeys TYPE bloom_filter(0.01) GRANULARITY 64,
  INDEX idx_tagsValues tagsValues TYPE bloom_filter(0.01) GRANULARITY 64,
  INDEX idx_duration durationNano TYPE minmax GRANULARITY 1
) ENGINE MergeTree()
PARTITION BY toDate(timestamp)
ORDER BY (serviceName, -toUnixTimestamp(timestamp))
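`ORDER BY (serviceName, -toUnixTimestamp(timestamp))` stores rows grouped by service with the newest spans first, and `PARTITION BY toDate(timestamp)` creates one partition per day. The Python sketch below only mimics that ordering for illustration; rows are plain dicts and timestamps are assumed to be UTC (ClickHouse's `toDate` uses the server time zone). Because `toUnixTimestamp` has whole-second resolution, spans within the same second are not ordered among themselves by this key.

```python
from datetime import date, datetime, timezone
from typing import Any, Dict, Tuple

Span = Dict[str, Any]  # hypothetical row shape: {"serviceName": str, "timestamp": datetime}


def sort_key(span: Span) -> Tuple[str, int]:
    """Mimic ORDER BY (serviceName, -toUnixTimestamp(timestamp))."""
    # Truncate to whole seconds, then negate so newer spans sort first.
    return (span["serviceName"], -int(span["timestamp"].timestamp()))


def partition_key(span: Span) -> date:
    """Mimic PARTITION BY toDate(timestamp), assuming a UTC server."""
    return span["timestamp"].astimezone(timezone.utc).date()
```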

deploy/docker/clickhouse-setup/otel-collector-config.yaml
@@ -0,0 +1,39 @@
receivers:
  otlp:
    protocols:
      grpc:
      http:
  jaeger:
    protocols:
      grpc:
      thrift_http:
processors:
  batch:
    send_batch_size: 1000
    timeout: 10s
  memory_limiter:
    # Same as --mem-ballast-size-mib CLI argument
    ballast_size_mib: 683
    # 80% of maximum memory up to 2G
    limit_mib: 1500
    # 25% of limit up to 2G
    spike_limit_mib: 512
    check_interval: 5s
  # queued_retry:
  #   num_workers: 4
  #   queue_size: 100
  #   retry_on_failure: true
extensions:
  health_check: {}
  zpages: {}
exporters:
  clickhouse:
    datasource: tcp://clickhouse:9000

service:
  extensions: [health_check, zpages]
  pipelines:
    traces:
      receivers: [jaeger, otlp]
      processors: [batch]
      exporters: [clickhouse]
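The comments beside `limit_mib` and `spike_limit_mib` describe sizing rules. Read literally (limit = 80% of available memory, spike allowance = 25% of the limit, each capped at 2G, taken here as 2048 MiB), they give the hypothetical helper below, which is a sketch of those comments and not part of the collector. With `limit_mib: 1500` the 25% rule gives 375 rather than the configured 512; 512 is what the rule yields once the limit reaches the 2048 MiB cap. Also note that `memory_limiter` is defined under `processors`, but the `traces` pipeline lists only `[batch]`; the collector runs only processors that a pipeline references, so as written the limiter is not active.

```python
from typing import Dict

CAP_MIB = 2048  # assumption: "2G" in the comments means 2048 MiB


def memory_limiter_settings(total_mib: int) -> Dict[str, int]:
    """Apply the config comments: limit = 80% of memory, spike = 25% of limit, both capped."""
    limit_mib = min(total_mib * 80 // 100, CAP_MIB)          # integer math avoids float rounding
    spike_limit_mib = min(limit_mib * 25 // 100, CAP_MIB)
    return {"limit_mib": limit_mib, "spike_limit_mib": spike_limit_mib}
```

For example, a container with 1875 MiB of memory gets `limit_mib` 1500 and `spike_limit_mib` 375, while anything above 2560 MiB hits the cap and gets 2048 and 512.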

@@ -1,6 +1,16 @@
server {
    listen 3000;
    server_name _;

    gzip on;
    gzip_static on;
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript;
    gzip_proxied any;
    gzip_vary on;
    gzip_comp_level 6;
    gzip_buffers 16 8k;
    gzip_http_version 1.1;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
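With `gzip on`, nginx compresses a response when the client advertises gzip support and the response's MIME type is listed in `gzip_types`; `text/html` is always compressed and does not need to be listed. A simplified, hypothetical model of that decision is sketched below; it ignores `gzip_min_length`, `gzip_proxied`, `q=0` weights in `Accept-Encoding`, and precompressed `.gz` files served by `gzip_static`. One consequence worth checking: if the server's `mime.types` maps `.js` to `application/javascript`, as recent nginx releases do, JavaScript bundles are not matched by the `application/x-javascript` entry above.

```python
from typing import Iterable

# Copied from the gzip_types directive above.
GZIP_TYPES = {
    "text/plain", "text/css", "application/json", "application/x-javascript",
    "text/xml", "application/xml", "application/xml+rss", "text/javascript",
}


def should_gzip(content_type: str, accept_encoding: str,
                gzip_types: Iterable[str] = GZIP_TYPES) -> bool:
    """Rough model: client accepts gzip and the MIME type is text/html or listed."""
    mime = content_type.split(";")[0].strip().lower()   # drop "; charset=..." parameters
    client_accepts = "gzip" in accept_encoding.lower()  # naive token check
    return client_accepts and (mime == "text/html" or mime in set(gzip_types))
```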

@@ -140,6 +140,11 @@ services:
    env_file:
      - environment_tiny/router
      - environment_tiny/common
    healthcheck:
      test: ["CMD", "wget", "--spider", "-q", "http://router:8888/druid/coordinator/v1/datasources/flattened_spans"]
      interval: 30s
      timeout: 5s
      retries: 5

  flatten-processor:
    image: signoz/flattener-processor:0.2.0
@@ -158,7 +163,7 @@ services:


  query-service:
    image: signoz.docker.scarf.sh/signoz/query-service:0.2.0
    image: signoz.docker.scarf.sh/signoz/query-service:0.3.1
    container_name: query-service

    depends_on:
@@ -169,11 +174,15 @@ services:
    environment:
      - DruidClientUrl=http://router:8888
      - DruidDatasource=flattened_spans
      - STORAGE=druid
      - POSTHOG_API_KEY=H-htDCae7CR3RV57gUzmol6IAKtm5IMCvbcm_fwnL-w


    depends_on:
      router:
        condition: service_healthy

  frontend:
    image: signoz/frontend:0.2.1
    image: signoz/frontend:0.3.2
    container_name: frontend

    depends_on:
@@ -183,7 +192,7 @@ services:
    ports:
      - "3000:3000"
    volumes:
      - ./nginx-config.conf:/etc/nginx/conf.d/default.conf
      - ../common/nginx-config.conf:/etc/nginx/conf.d/default.conf

  create-supervisor:
    image: theithollow/hollowapp-blog:curl
@@ -260,5 +269,5 @@ services:
      QUIET_MODE: "${QUIET_MODE:-false}"
      LOCUST_OPTS: "--headless -u 10 -r 1"
    volumes:
      - ./locust-scripts:/locust
      - ../common/locust-scripts:/locust

@@ -135,6 +135,11 @@ services:
      - router
    env_file:
      - environment_small/router
    healthcheck:
      test: ["CMD", "wget", "--spider", "-q", "http://router:8888/druid/coordinator/v1/datasources/flattened_spans"]
      interval: 30s
      timeout: 5s
      retries: 5

  flatten-processor:
    image: signoz/flattener-processor:0.2.0
@@ -153,7 +158,7 @@ services:


  query-service:
    image: signoz.docker.scarf.sh/signoz/query-service:0.2.0
    image: signoz.docker.scarf.sh/signoz/query-service:0.3.1
    container_name: query-service

    depends_on:
@@ -164,11 +169,15 @@ services:
    environment:
      - DruidClientUrl=http://router:8888
      - DruidDatasource=flattened_spans
      - STORAGE=druid
      - POSTHOG_API_KEY=H-htDCae7CR3RV57gUzmol6IAKtm5IMCvbcm_fwnL-w

    depends_on:
      router:
        condition: service_healthy

  frontend:
    image: signoz/frontend:0.2.1
    image: signoz/frontend:0.3.2
    container_name: frontend

    depends_on:

deploy/docker/druid-kafka-setup/environment_tiny/common_test
@@ -0,0 +1,26 @@
# For S3 storage

druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service", "druid-s3-extensions"]


druid_storage_type=s3
druid_storage_bucket=solvzy-test3
druid_storage_baseKey=druid/segments

AWS_ACCESS_KEY_ID=AKIARKCF5OX3CMI3XRXC
AWS_SECRET_ACCESS_KEY=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
AWS_REGION=us-east-2

druid_indexer_logs_type=s3
druid_indexer_logs_s3Bucket=solvzy-test3
druid_indexer_logs_s3Prefix=druid/indexing-logs

# -----------------------------------------------------------
# For local storage
# druid_extensions_loadList=["druid-histogram", "druid-datasketches", "druid-lookups-cached-global", "postgresql-metadata-storage", "druid-kafka-indexing-service"]

# druid_storage_type=local
# druid_storage_storageDirectory=/opt/data/segments
# druid_indexer_logs_type=file
# druid_indexer_logs_directory=/opt/data/indexing-logs

@@ -1,256 +0,0 @@
#!/bin/bash

set -o errexit

is_command_present() {
    type "$1" >/dev/null 2>&1
}

is_mac() {
    [[ $OSTYPE == darwin* ]]
}


check_k8s_setup() {
    echo "Checking your k8s setup status"
    if ! is_command_present kubectl; then
        echo "Please install kubectl on your machine"
        exit 1
    else

        if ! is_command_present jq; then
            install_jq
        fi
        clusters=`kubectl config view -o json | jq -r '."current-context"'`
        if [[ ! -n $clusters ]]; then
            echo "Please setup a k8s cluster & config kubectl to connect to it"
            exit 1
        fi
        k8s_minor_version=`kubectl version --short -o json | jq ."serverVersion.minor" | sed 's/[^0-9]*//g'`
        # if [[ $k8s_minor_version < 18 ]]; then
        #     echo "+++++++++++ ERROR ++++++++++++++++++++++"
        #     echo "SigNoz deployments require Kubernetes >= v1.18. Found version: v1.$k8s_minor_version"
        #     echo "+++++++++++ ++++++++++++++++++++++++++++"
        #     exit 1
        # fi;
    fi
}
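`check_k8s_setup` strips non-digits from the server's minor version because managed clusters can report values such as `"18+"`. The commented-out guard `[[ $k8s_minor_version < 18 ]]` would compare the two values as strings inside `[[ ]]`, so `9` would not count as less than `18`; a numeric test (`-lt`, or `(( ))`) avoids that. A Python sketch of the same extraction followed by a numeric comparison, assuming the JSON shape that `kubectl version -o json` prints (the function name is hypothetical):

```python
import json
import re
from typing import Optional


def k8s_minor_version(version_json: str) -> Optional[int]:
    """Return serverVersion.minor as an int, or None if it is missing."""
    data = json.loads(version_json)
    minor = data.get("serverVersion", {}).get("minor")
    if minor is None:
        return None
    digits = re.sub(r"[^0-9]", "", minor)  # same idea as sed 's/[^0-9]*//g'
    return int(digits) if digits else None
```

A caller would then compare numerically, for example `k8s_minor_version(output) >= 18`.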

install_jq(){
    if [ $package_manager == "brew" ]; then
        brew install jq
    elif [ $package_manager == "yum" ]; then
        yum_cmd="sudo yum --assumeyes --quiet"
        $yum_cmd install jq
    else
        apt_cmd="sudo apt-get --yes --quiet"
        $apt_cmd update
        $apt_cmd install jq
    fi
}


check_os() {
    if is_mac; then
        package_manager="brew"
        desired_os=1
        os="Mac"
        return
    fi

    os_name="$(cat /etc/*-release | awk -F= '$1 == "NAME" { gsub(/"/, ""); print $2; exit }')"

    case "$os_name" in
        Ubuntu*)
            desired_os=1
            os="ubuntu"
            package_manager="apt-get"
            ;;
        Debian*)
            desired_os=1
            os="debian"
            package_manager="apt-get"
            ;;
        Red\ Hat*)
            desired_os=1
            os="red hat"
            package_manager="yum"
            ;;
        CentOS*)
            desired_os=1
            os="centos"
            package_manager="yum"
            ;;
        *)
            desired_os=0
            os="Not Found"
    esac
}
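`check_os` reads the `NAME=` field from `/etc/*-release` and maps its prefix to a package manager. On an unrecognised distribution the `*)` branch sets no `package_manager`, so `install_jq`'s unquoted `[ $package_manager == "brew" ]` expands to `[ == "brew" ]`, which fails with a "unary operator expected" error and falls through to the `apt-get` branch; quoting the variable (`"$package_manager"`) avoids the error. A Python sketch of the detection logic, with `None` standing in for the unset variable (all names are hypothetical):

```python
from typing import Optional, Tuple

# (NAME prefix, os label, package manager), in the same order as the case statement.
KNOWN_OS = [
    ("Ubuntu", "ubuntu", "apt-get"),
    ("Debian", "debian", "apt-get"),
    ("Red Hat", "red hat", "yum"),
    ("CentOS", "centos", "yum"),
]


def os_release_name(text: str) -> str:
    """Value of the first NAME= line with quotes removed, like the awk one-liner."""
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key == "NAME":
            return value.replace('"', "")
    return ""


def detect_os(name: str) -> Tuple[str, Optional[str]]:
    """Return (os label, package manager); the manager is None when unknown."""
    for prefix, label, manager in KNOWN_OS:
        if name.startswith(prefix):
            return label, manager
    return "Not Found", None  # the script leaves package_manager unset here
```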

echo_contact_support() {
    echo "Please contact <support@signoz.io> with your OS details and version${1:-.}"
}

bye() {  # Prints a friendly good bye message and exits the script.
    set +o errexit
    echo "Please share your email to receive support with the installation"
    read -rp 'Email: ' email

    while [[ $email == "" ]]
    do
        read -rp 'Email: ' email
    done

    DATA='{ "api_key": "H-htDCae7CR3RV57gUzmol6IAKtm5IMCvbcm_fwnL-w", "type": "capture", "event": "Installation Support", "distinct_id": "'"$SIGNOZ_INSTALLATION_ID"'", "properties": { "os": "'"$os"'", "email": "'"$email"'", "platform": "k8s", "k8s_minor_version": "'"$k8s_minor_version"'" } }'
    URL="https://app.posthog.com/capture"
    HEADER="Content-Type: application/json"


    if has_curl; then
        curl -sfL -d "$DATA" --header "$HEADER" "$URL" > /dev/null 2>&1
    elif has_wget; then
        wget -q --post-data="$DATA" --header="$HEADER" "$URL" > /dev/null 2>&1
    fi

    echo -e "\nExiting for now. Bye! \U1F44B\n"
    exit 1
}
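`bye` builds `DATA` by splicing `$email`, `$os` and other shell variables between single-quoted JSON fragments, so a value containing `"` or `\` produces an invalid request body. Separately, `has_curl` and `has_wget` are called here and in the top-level code, but neither function is defined anywhere before the top-level "Installation Started" call; inside an `if` condition the resulting "command not found" status is treated as false, so that request is never sent. A sketch of building the body with a JSON encoder instead; the function name and the placeholder key in the usage note are hypothetical:

```python
import json
from typing import Dict


def capture_payload(api_key: str, event: str, distinct_id: str,
                    properties: Dict[str, str]) -> str:
    """Serialize the capture event with proper JSON escaping."""
    return json.dumps({
        "api_key": api_key,
        "type": "capture",
        "event": event,
        "distinct_id": distinct_id,
        "properties": properties,
    })
```

For example, `capture_payload("<api-key>", "Installation Support", "203.0.113.7", {"email": 'user"name@example.com'})` returns a body that `json.loads` decodes back to the same email string.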

deploy_app() {
    kubectl apply -f "$install_dir/config-template"
    kubectl apply -f "$install_dir"
}

wait_for_application_start() {
    local timeout=$1
    address=$custom_domain
    if [[ "$ssl_enable" == "true" ]]; then
        protocol="https"
    else
        protocol="http"
    fi
    # The while loop is important because for-loops don't work for dynamic values
    while [[ $timeout -gt 0 ]]; do
        if [[ $address == "" || $address == null ]]; then
            address=`kubectl get ingress appsmith-ingress -o json | jq -r '.status.loadBalancer.ingress[0].ip'`
        fi
        status_code="$(curl -s -o /dev/null -w "%{http_code}" $protocol://$address/api/v1 || true)"
        if [[ status_code -eq 401 ]]; then
            break
        else
            echo -ne "Waiting for all containers to start. This check will timeout in $timeout seconds...\r\c"
        fi
        ((timeout--))
        sleep 1
    done

    echo ""
}
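`wait_for_application_start` leaves its loop as soon as the endpoint answers `401`, but the caller then reports an error unless `$status_code` is `200`, so a server that came up and returned `401` is still treated as a failed start. A sketch that keeps the loop's success condition and the caller's check in one place by sharing a single set of accepted codes; `fetch_status` is a hypothetical callable returning the HTTP status, or `None` when the request fails:

```python
import time
from typing import Callable, Collection, Optional


def wait_for_status(fetch_status: Callable[[], Optional[int]],
                    accepted: Collection[int],
                    timeout_s: int,
                    sleep: Callable[[float], None] = time.sleep) -> Optional[int]:
    """Poll once per second until an accepted status arrives or timeout_s polls are used.

    Returns the last status seen; the caller checks it against the same `accepted` set.
    """
    last: Optional[int] = None
    for _ in range(timeout_s):
        last = fetch_status()
        if last in accepted:
            break
        sleep(1)
    return last
```

The caller then treats `result in accepted` as success, so a `401` that ends the loop can no longer trigger the error branch.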

echo -e "👋 Thank you for trying out SigNoz! "
echo ""


# Checking OS and assigning package manager
desired_os=0
os=""
echo -e "🕵️ Detecting your OS"
check_os
SIGNOZ_INSTALLATION_ID=$(curl -s 'https://api64.ipify.org')

# Run bye if failure happens
trap bye EXIT

DATA='{ "api_key": "H-htDCae7CR3RV57gUzmol6IAKtm5IMCvbcm_fwnL-w", "type": "capture", "event": "Installation Started", "distinct_id": "'"$SIGNOZ_INSTALLATION_ID"'", "properties": { "os": "'"$os"'", "platform": "k8s", "k8s_minor_version": "'"$k8s_minor_version"'" } }'

URL="https://app.posthog.com/capture"
HEADER="Content-Type: application/json"

if has_curl; then
    curl -sfL -d "$DATA" --header "$HEADER" "$URL" > /dev/null 2>&1
elif has_wget; then
    wget -q --post-data="$DATA" --header="$HEADER" "$URL" > /dev/null 2>&1
fi

# Check for kubernetes setup
check_k8s_setup

echo ""
echo "Deploy Appmisth on your cluster"
echo ""

deploy_app

wait_for_application_start 60


if [[ $status_code -ne 200 ]]; then
    echo "+++++++++++ ERROR ++++++++++++++++++++++"
    echo "The containers didn't seem to start correctly. Please run the following command to check containers that may have errored out:"
    echo ""
    echo -e "sudo docker-compose -f docker/docker-compose-tiny.yaml ps -a"
    echo "Please read our troubleshooting guide https://signoz.io/docs/deployment/docker#troubleshooting"
    echo "or reach us on SigNoz for support https://join.slack.com/t/signoz-community/shared_invite/zt-lrjknbbp-J_mI13rlw8pGF4EWBnorJA"
    echo "++++++++++++++++++++++++++++++++++++++++"

    SUPERVISORS="$(curl -so - http://localhost:8888/druid/indexer/v1/supervisor)"

    DATASOURCES="$(curl -so - http://localhost:8888/druid/coordinator/v1/datasources)"

    DATA='{ "api_key": "H-htDCae7CR3RV57gUzmol6IAKtm5IMCvbcm_fwnL-w", "type": "capture", "event": "Installation Error - Checks", "distinct_id": "'"$SIGNOZ_INSTALLATION_ID"'", "properties": { "os": "'"$os"'", "platform": "k8s", "error": "Containers not started", "SUPERVISORS": '"$SUPERVISORS"', "DATASOURCES": '"$DATASOURCES"' } }'

    URL="https://app.posthog.com/capture"
    HEADER="Content-Type: application/json"

    if has_curl; then
        curl -sfL -d "$DATA" --header "$HEADER" "$URL" > /dev/null 2>&1
    elif has_wget; then
        wget -q --post-data="$DATA" --header="$HEADER" "$URL" > /dev/null 2>&1
    fi

    exit 1

else